Right, so you can definitely find more efficient ways to move the phase of the oscillator.

One common approach is to pre-generate the waveform into a table (array) and then read it at different phase positions, interpolating between entries according to the pitch (detune), without ever moving the samples in the (now static) table.
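Here is a minimal C++ sketch of the table approach. It assumes a single-cycle sine table and linear interpolation between neighbouring entries. The class name WavetableOsc and the default table size of 2048 are illustrative choices, not taken from JUCE or the original post. Only the phase moves; the table itself never changes:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical wavetable oscillator: one cycle of the waveform is generated once
// into a static table; playback only advances a phase and interpolates.
class WavetableOsc {
public:
    explicit WavetableOsc(std::size_t tableSize = 2048) : table_(tableSize) {
        assert(tableSize > 1);
        const double twoPi = 6.283185307179586;
        // Pre-generate one sine cycle (any single-cycle waveform could be stored here).
        for (std::size_t i = 0; i < tableSize; ++i)
            table_[i] = static_cast<float>(std::sin(twoPi * double(i) / double(tableSize)));
    }

    // Pitch (including detune) only changes how fast we move through the table.
    void setFrequency(double hz, double sampleRate) {
        assert(hz >= 0.0 && sampleRate > 0.0);
        increment_ = hz * double(table_.size()) / sampleRate; // table entries per output sample
    }

    float nextSample() {
        const std::size_t size = table_.size();
        const std::size_t i0 = static_cast<std::size_t>(phase_);
        const std::size_t i1 = (i0 + 1) % size; // wrap to the start of the table
        const float frac = static_cast<float>(phase_ - double(i0));
        const float out = table_[i0] + frac * (table_[i1] - table_[i0]); // linear interpolation

        phase_ += increment_;
        while (phase_ >= double(size))
            phase_ -= double(size); // keep the phase in [0, size)
        return out;
    }

private:
    std::vector<float> table_;
    double phase_ = 0.0;
    double increment_ = 0.0;
};
```

For unison, each detuned copy would only need its own phase and increment. In a real synth the table could also be shared between all of them instead of being owned per instance as in this sketch.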

Let’s go back to AudioBuffer for a moment.

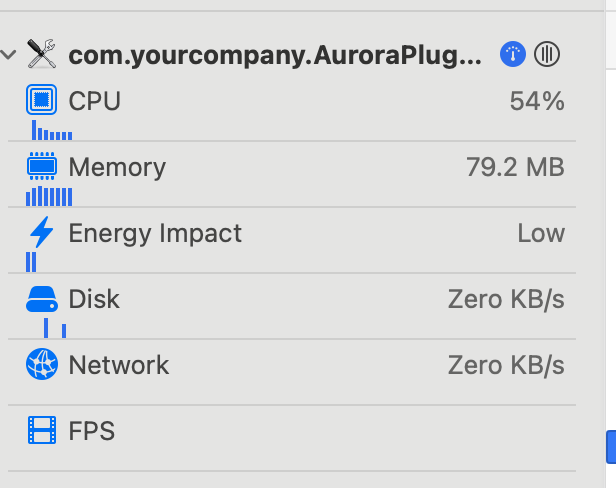

Recently I had an issue with high CPU load in GarageBand (iOS): (SOLVED) iOS GarageBand - Help with High CPU load. Everything is fine with Cubasis - #2 by vtarasuk

As it turned out, replacing AudioBuffer with a std::array or a C array dropped the CPU load from 300% to 47-54%.

Today I decided to experiment a bit more, since I want my application to support dynamic buffer lengths and varying channel counts instead of fixed ones.

And here is a strange observation: when I allocate the AudioBuffer statically (once, as a class member), the CPU load is still up to 300%.

Pseudo code (CPU: 300+ %):

    class Voice {
    public:
        void prepareToPlay (int channels, int samplesPerBlockExpected)
        {
            // <<<-- init/reinit of our buffer is here
            voiceBuffer_.setSize (channels, samplesPerBlockExpected /* , true/false, true/false, ... */);
        }

        void processBlock (juce::AudioBuffer<float>& outputBuffer)
        {
            auto* p = voiceBuffer_.getArrayOfWritePointers();

            for (int n = 0; n < outputBuffer.getNumSamples(); ++n)
            {
                p[0][n] = /* some sound for the left channel */ 0.0f;
                p[1][n] = /* some sound for the right channel */ 0.0f;
            }
        }

    private:
        juce::AudioBuffer<float> voiceBuffer_ {}; // <<<-- init of our buffer is here
    };

I also tried simply creating the buffer right inside the audio thread (in processBlock). Surprisingly, it worked and I got the same results as with the fixed C array.

Pseudo code (CPU: 47-54%):

    class Voice {
    public:
        void prepareToPlay (int channels, int samplesPerBlockExpected)
        {
            channels_ = channels;
            numOfSamples_ = samplesPerBlockExpected;
        }

        void processBlock (juce::AudioBuffer<float>& outputBuffer)
        {
            juce::AudioBuffer<float> voiceBuffer (channels_, numOfSamples_); // <<<-- init of our buffer is here
            auto* p = voiceBuffer.getArrayOfWritePointers();

            for (int n = 0; n < outputBuffer.getNumSamples(); ++n)
            {
                p[0][n] = /* some sound for the left channel */ 0.0f;
                p[1][n] = /* some sound for the right channel */ 0.0f;
            }
        }

    private:
        int channels_ {};
        int numOfSamples_ {};
    };

Based on this, I have a few questions:

- Does this mean the buffer in the last example is allocated on the stack, or is it still on the heap?

- Do I need to deallocate it somehow? I don’t see any memory growth even after 10-15 minutes of running.

- Is it even good practice to create an AudioBuffer on the stack like this in the realtime thread? (I'm not sure how it works under the hood.)

P.S. As I mentioned, this is only a problem in GarageBand. In Cubase or in the standalone app, for example, both implementations give me a low load (47-54%). By low, of course, I mean with all voices, all oscillators, unison, modulation and 4x oversampling turned on.

Thank you.

First question whenever you deal with performance and throw CPU percentages into the ring: did you measure a release build, ideally with the optimiser turned on?

There is a good chance your examples show no difference in an optimised build, because the indirections (setSample() and similar calls) will be optimised away and produce the same assembly.

Secondly, the system's CPU meter is not really usable for proper profiling: it measures overall load, so it can be affected by other processes. It is only useful as coarse qualitative data, not as quantitative data.
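If you want numbers that depend less on other processes than the system CPU meter, one rough option is to time the callback itself. You then compare that time to the block's real-time budget, which is numSamples / sampleRate. This is my own suggestion, not a substitute for a real profiler such as Instruments. The helper name blockLoad is hypothetical:

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Hypothetical helper: fraction of a block's real-time budget that one call used.
// 1.0 means processing took exactly as long as the audio it produced lasts.
double blockLoad(const std::function<void()>& processBlock, int numSamples, double sampleRate) {
    assert(numSamples > 0 && sampleRate > 0.0);
    const auto start = std::chrono::steady_clock::now();
    processBlock();
    const auto end = std::chrono::steady_clock::now();

    const double elapsedSeconds = std::chrono::duration<double>(end - start).count();
    const double budgetSeconds = double(numSamples) / sampleRate; // 256 / 44100 Hz is about 5.8 ms
    return elapsedSeconds / budgetSeconds;
}
```

A value close to or above 1.0 means the callback risks missing its deadline. A single fast block says nothing about occasional worst-case blocks, so you would want to track the maximum over many calls.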

Your questions:

- The AudioBuffer<float> voiceBuffer object is put on the stack, BUT internally it allocates space for the samples on the heap. AudioBuffer is basically a RAII class for audio samples, similar to std::unique_ptr. So it is not realtime safe.

- You don’t need to deallocate it (again, RAII): when the AudioBuffer goes out of scope, it deallocates the sample data.

- So no, it is not suitable for realtime use. It may work fine for a while, as long as memory allocation is not contested by other threads, but it can suddenly fail in a way you have no chance to reproduce.
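To illustrate the stack/heap split described above, here is a toy owning buffer. The class OwningBuffer is hypothetical and much simpler than juce::AudioBuffer. The object itself is only a couple of ints and a pointer, so it can live on the stack. The constructor allocates the samples on the heap, and std::unique_ptr frees them automatically when the object goes out of scope:

```cpp
#include <cassert>
#include <cstddef>
#include <memory>

// Toy owning buffer (hypothetical, far simpler than juce::AudioBuffer).
// Precondition: numChannels > 0 and numSamples > 0.
class OwningBuffer {
public:
    OwningBuffer(int numChannels, int numSamples)
        : numChannels_(numChannels),
          numSamples_(numSamples),
          // Heap allocation happens here, every time an OwningBuffer is constructed.
          data_(std::make_unique<float[]>(std::size_t(numChannels) * std::size_t(numSamples))) {}

    // No destructor needed: unique_ptr frees the samples when the object goes out of scope (RAII).

    float* getWritePointer(int channel) {
        assert(channel >= 0 && channel < numChannels_);
        return data_.get() + std::size_t(channel) * std::size_t(numSamples_);
    }

    int getNumChannels() const { return numChannels_; }
    int getNumSamples() const { return numSamples_; }

private:
    int numChannels_;
    int numSamples_;
    std::unique_ptr<float[]> data_; // samples live on the heap; only this pointer lives in the object
};
```

Constructing one of these inside processBlock() therefore still calls the heap allocator on every callback, even though the object looks like a plain local variable.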

@daniel Thanks for the answer. I only use release mode for such tests.

My God. I had a stupid typo all this time. In PluginProcessor.cpp I called

synth.prepareToPlay(25, samplesPerBlock, float(sampleRate)); i.e. 25 channels. I just replaced it with 2 channels and got a CPU load of 55-58%, which is about 10% more than with the C array.


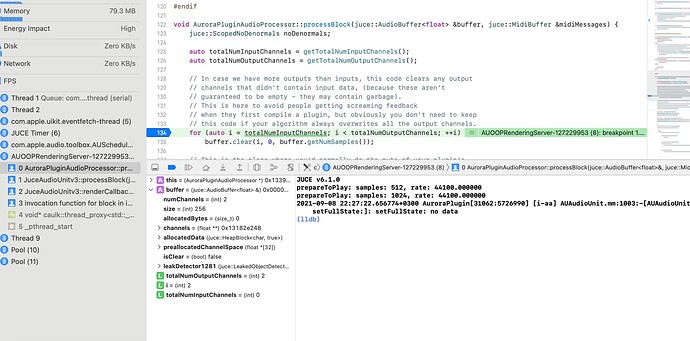

Anyway, I see some strange behavior while the app is starting. In the console I see:

    JUCE v6.1.0
    prepareToPlay: samples: 512, rate: 44100.000000
    prepareToPlay: samples: 1024, rate: 44100.000000

But when debugging, the main buffer in processBlock() is actually 256 samples. Is that correct behavior? Shouldn't the samplesPerBlockExpected passed to prepareToPlay() match the buffer size that arrives in processBlock()?

No, the samplesPerBlockExpected in prepareToPlay is the maximum buffer size you are expected to handle in processBlock. The buffer passed to processBlock can have any size from 1 sample up to that maximum, and in pathological cases perhaps even 0.

@xenakios Thanks. I thought it might differ by a couple of samples, but I didn’t know it could be 4 times smaller.
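The usual consequence is to allocate once for the maximum size in prepareToPlay and then only touch the first numSamples entries in each callback. Below is a minimal sketch of that pattern in plain C++. PreallocatedVoice, prepare and process are hypothetical names; in JUCE the scratch storage would typically be an AudioBuffer member sized with setSize in prepareToPlay:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical voice: prepare() may allocate, process() never does and
// handles any block size from 0 up to the maximum announced in prepare().
class PreallocatedVoice {
public:
    void prepare(int numChannels, int maxBlockSize) {
        maxBlockSize_ = maxBlockSize;
        // Allocation happens here, off the audio thread.
        scratch_.assign(std::size_t(numChannels),
                        std::vector<float>(std::size_t(maxBlockSize), 0.0f));
    }

    // 'out' holds one pointer per channel; numSamples may be smaller than maxBlockSize_.
    void process(float* const* out, int numChannels, int numSamples) {
        assert(numSamples <= maxBlockSize_);
        assert(numChannels <= int(scratch_.size()));
        for (int ch = 0; ch < numChannels; ++ch) {
            float* tmp = scratch_[std::size_t(ch)].data(); // reuse preallocated memory
            for (int n = 0; n < numSamples; ++n)
                tmp[n] = 0.25f;        // placeholder for "some sound"
            for (int n = 0; n < numSamples; ++n)
                out[ch][n] += tmp[n];  // mix into the host buffer
        }
    }

private:
    int maxBlockSize_ = 0;
    std::vector<std::vector<float>> scratch_;
};
```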

The code you posted defines an AudioBuffer called voiceBuffer, but the next line of code uses voiceBuffer_, a member of the class. What’s the local buffer for?

@HowardAntares Sorry, it’s actually a typo; I changed it to “auto *p = voiceBuffer.getArrayOfWritePointers();”

Setting aside the fact that I used 25 channels (my stupid mistake), I still see a performance difference between the code that allocates in the realtime thread and the code where the buffer is declared locally (as a class member) and initialized up front.

Here is the result when I allocate the buffer in the realtime thread:

And here is the result when I declare the buffer locally and then set its size in prepareToPlay:

This is very strange. It seems that, inside the audio thread, the system allocates a possibly better piece of memory than when the program starts.

My 2 cents here: I wouldn’t use that approach to handle unison. I would make my synth engine able to play a defined number of voices, with a predefined maximum number of voices for the unison.

I would give my synthVoice class methods to set coarse and fine tuning, panning and so on. That way I can change these values from the synth engine. When the synth engine plays a voice, it reads the unison parameter and then fires a note-on for the additional voices with the proper tuning/panning/volume and so on.

That way my synthVoice class won’t have to deal with much branching and should be fast.
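One possible reading of this engine-side approach, as a sketch: the engine owns a fixed pool of simple voices and, on note-on, starts as many of them as the unison setting asks for, each with its own detune and pan. SimpleVoice, UnisonEngine and the symmetric spread law are assumptions for illustration:

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical voice: the engine sets tuning and pan, the voice just plays.
struct SimpleVoice {
    bool active = false;
    int note = -1;
    double detuneCents = 0.0;
    float pan = 0.0f; // -1 = left, +1 = right

    void start(int midiNote, double cents, float panPos) {
        active = true; note = midiNote; detuneCents = cents; pan = panPos;
    }
};

// Hypothetical engine with a fixed pool: nothing is allocated at note-on.
template <std::size_t MaxVoices>
class UnisonEngine {
public:
    // Starts up to `unison` free voices for one note; returns how many were started.
    int noteOn(int midiNote, int unison, double spreadCents) {
        assert(unison >= 1);
        int started = 0;
        for (auto& v : voices_) {
            if (started == unison) break;
            if (v.active) continue;
            // Position in [-1, 1] across the unison stack (0 when unison == 1).
            const double pos = unison > 1 ? 2.0 * started / (unison - 1) - 1.0 : 0.0;
            v.start(midiNote, pos * spreadCents * 0.5, float(pos));
            ++started;
        }
        return started; // fewer than `unison` if the pool is exhausted
    }

    const std::array<SimpleVoice, MaxVoices>& voices() const { return voices_; }

private:
    std::array<SimpleVoice, MaxVoices> voices_{};
};
```

When the pool runs out, this sketch simply starts fewer voices; a real synth would probably steal voices instead.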

Also, for the AudioBuffer: unless you are creating a synth that must work with more than 2 audio channels, just allocate 2 channels in prepareToPlay and get the pointers you need without calling getArrayOfWritePointers. Get write pointers for the two channels, then deal with the channel configuration in your playback loop.

I know modern coders will frown at me, but if you already know which type you are dealing with, just avoid auto. To me, that makes the code more readable.
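Here is a sketch of fetching two channel pointers once and handling the host's channel layout inside the loop. In JUCE the pointers would come from getWritePointer(0) and getWritePointer(1). Plain arrays stand in for the buffer here, and the function name writeVoiceToOutput and the mono fold-down rule are assumptions:

```cpp
#include <cassert>

// Hypothetical helper: mixes a stereo voice into whatever channel layout the host gives us.
// left/right are the voice's own samples; outputs holds one pointer per host channel.
void writeVoiceToOutput(const float* left, const float* right,
                        float* const* outputs, int numOutputChannels, int numSamples)
{
    assert(numOutputChannels >= 1);
    if (numOutputChannels == 1) {
        float* mono = outputs[0];
        for (int n = 0; n < numSamples; ++n)
            mono[n] += 0.5f * (left[n] + right[n]); // fold stereo down to mono
        return;
    }
    float* outL = outputs[0]; // fetch the two pointers once, outside the loop
    float* outR = outputs[1];
    for (int n = 0; n < numSamples; ++n) {
        outL[n] += left[n];
        outR[n] += right[n];
    }
    // Any extra host channels (3 and up) are left untouched in this sketch.
}
```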

One last thing: NEVER EVER allocate in processBlock. You are dealing with a real-time process and allocations are a very bad thing to do, especially in that place.

My 2 cents

Luca

lol. This way I now get a stable 37-39%. But as soon as I use setSize(…), the load goes up to 54-66%.

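    // Here voiceBuffer_ is a raw pointer member: juce::AudioBuffer<float>* voiceBuffer_ = nullptr;
    void prepareToPlay (int channels, int samplesPerBlockExpected)
    {
        delete voiceBuffer_;
        voiceBuffer_ = new juce::AudioBuffer<float> (channels, samplesPerBlockExpected);

        // The setSize variant (with voiceBuffer_ as a plain member) that was slower for me:
        // voiceBuffer_.setSize (channels, samplesPerBlockExpected, ....);
    }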

@lcapozzi Thanks. Sure, I never planned to allocate an AudioBuffer in the realtime thread. These are just observations; I'm trying to understand why there is such a big difference between a C array and an AudioBuffer that is allocated at the start of the program.

Regarding the unison: that would work well for polyphony. My unison should work even in mono mode, so when I hit 1 note, I hear 1-16 waves with slightly different frequencies and pan positions across the channels. So each oscillator can produce up to 16 voices, and you can additionally press 16 notes. That means 4 oscillators x 16 unison x 16 notes x 4x oversampling = 4096 voices in total.

(Serum actually has the same kind of unison, but with two oscillators.)
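For the per-oscillator unison described here, the detuned frequencies can be computed into a fixed-size array without any allocation. The sketch below assumes a symmetric spread of +/- spreadCents/2 and uses the standard cents-to-ratio conversion 2^(cents/1200). kMaxUnison, UnisonVoice and makeUnison are hypothetical names:

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

constexpr int kMaxUnison = 16; // the "1-16 waves" per oscillator described above

// Hypothetical per-copy state for one unison wave.
struct UnisonVoice {
    double frequencyHz = 0.0;
    float pan = 0.0f; // -1 = hard left, +1 = hard right
};

// Fills `out` with `count` detuned copies of one note; no heap allocation.
// Detune is spread symmetrically over +/- spreadCents / 2 (an assumed spread law).
void makeUnison(double baseHz, int count, double spreadCents,
                std::array<UnisonVoice, kMaxUnison>& out)
{
    assert(count >= 1 && count <= kMaxUnison);
    for (int i = 0; i < count; ++i) {
        const double pos = count > 1 ? 2.0 * i / (count - 1) - 1.0 : 0.0; // -1 .. +1
        const double cents = pos * spreadCents * 0.5;
        out[std::size_t(i)].frequencyHz = baseHz * std::pow(2.0, cents / 1200.0);
        out[std::size_t(i)].pan = float(pos);
    }
}
```

Each UnisonVoice would then drive its own phase increment into the same waveform.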

That is the worst option of all:

AudioBuffer is specifically designed to be a lightweight object that manages the lifetime of sample data on the heap.

It makes no sense to allocate the lightweight object on the heap as well.

The problem with allocating and calling setSize in processBlock is not that it is expensive and you would see it on the CPU meter. As I said before, 99% of the time you won’t see it on the CPU meter at all.

The problem with heap allocation is that heap memory management is per process, so all threads in that process need to synchronise when they want to allocate or deallocate memory.

When threads synchronise, you will inevitably get situations where a high-priority thread has to wait for a low-priority thread. This is called priority inversion: the high-priority thread is effectively handled after a low-priority thread.

Even if that low-priority thread is fast at allocating, Fabian and Dave point out in their Real-time 101 talk that the OS is allowed to put the low-priority thread to sleep to handle another thread. Now your realtime thread is waiting for a sleeping thread!

And if you want to allocate anywhere, don’t use new and delete! This is how you wrote C++ 20 years ago. Use RAII wrappers for everything to be safe from leaking and have lifetime sorted for good.

@daniel No, no, all is right (these were just my tests/experiments). I know about the cost of heap memory. That's why I want to preallocate the buffer in prepareToPlay(). But so far I have not been able to reach the same 37% CPU. As you can see above, deleting and re-creating the buffer works better for me than setSize(). I checked 7 times to be sure: the result was 37-38% in all cases. But as soon as I use setSize, it’s again 54-66%.


Also, I don’t think this is an error of the Xcode profiler, because I also tried pressing more than 16 voices, and the audio started crackling precisely in the setSize case.

The mistake is that you are not allocating in prepareToPlay if you call setSize() in processBlock().

Have a look at the source of AudioBuffer:

You can create a subset of an AudioBuffer to keep a simple interface:

    juce::AudioBuffer<float> actualVoiceBuffer (voiceBuffer.getArrayOfWritePointers(), // reference the already allocated samples
                                                voiceBuffer.getNumChannels(),          // same number of channels
                                                0,                                     // start at zero
                                                buffer.getNumSamples());               // same size as the process buffer

A more modern interface for non-allocating access to sample data is dsp::AudioBlock, but only a few JUCE classes use it (the ones in the juce_dsp module).

@daniel I only call setSize in prepareToPlay(). In processBlock() I only read/write up to the length that comes from buffer.getNumSamples().
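Here is the same idea expressed in plain C++, as a sketch: a non-owning view over sample memory that was already allocated, narrowed to the length of the current block. BlockView and makeBlockView are hypothetical stand-ins for what the referencing AudioBuffer constructor or dsp::AudioBlock provide in JUCE:

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical non-owning view: creating or copying it never allocates.
struct BlockView {
    float* const* channels; // borrowed channel pointers, owned elsewhere
    std::size_t numChannels;
    std::size_t startSample;
    std::size_t numSamples;

    float* getWritePointer(std::size_t ch) const {
        assert(ch < numChannels);
        return channels[ch] + startSample;
    }
};

// Narrows a buffer preallocated for the maximum block size to the current block length.
inline BlockView makeBlockView(float* const* channels, std::size_t numChannels,
                               std::size_t capacity, std::size_t numSamplesThisBlock)
{
    assert(numSamplesThisBlock <= capacity);
    return BlockView{ channels, numChannels, 0, numSamplesThisBlock };
}
```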

Ok, maybe I misunderstood you at some point.

I think this confused me: what do you mean by locally? I thought it meant local to processBlock().

Anyway…

@daniel Sorry for my bad English. By local I mean this:


    class xxx {
    private:
        juce::AudioBuffer<float> buffer_ {};
    };

No worries, it was completely my bad. I tried to find why I was so convinced you were calling setSize in processBlock and couldn’t find it, so it seems I didn’t read properly.

Good luck!