So I’ve been looking to replace some operations with lookup tables to reduce CPU usage. dsp::LookupTableTransform seems like a natural choice for this, except it doesn’t appear to be any faster in my case. I’m currently using it to replace a non-integer power-of-2 operation and an exponential function, both of which are called every sample.

For instance, I have the operation:

fmin(exp(2.99573227355 + 0.01 * value * 6.90775527898), (m_sampleRate / 2.0) - 500.0)

(this simply maps a “value” from 0 to 100 onto a frequency range that starts at 20 Hz, reaches 20 kHz at 100, and is capped 500 Hz below Nyquist)
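
To make the mapping concrete, here is the same expression as a small free function. This is only a sketch: the name `valueToFrequency` and the explicit `sampleRate` parameter are made up for illustration (the real code reads `m_sampleRate` from the processor). The constants are ln(20) and ln(1000), so the unclamped curve is 20 * 1000^(value / 100).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper, not part of the plugin: maps a control value in
// [0, 100] to a frequency in Hz.
// exp(2.99573227355) is 20 and exp(6.90775527898) is 1000, so the
// unclamped curve is 20 * 1000^(value / 100).
inline double valueToFrequency(double value, double sampleRate)
{
    assert(sampleRate > 1000.0); // the cap below must stay positive

    const double unclamped = std::exp(2.99573227355 + 0.01 * value * 6.90775527898);

    // Cap the result 500 Hz below Nyquist, as in the original expression.
    return std::min(unclamped, sampleRate / 2.0 - 500.0);
}
```

At 44.1 kHz the cap (21550 Hz) is never reached, since the curve tops out at 20 kHz; it only kicks in at lower sample rates such as 32 kHz.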

I’ve since tried replacing it with a lookup table initialized like:

freqScale.initialise([=](float value) {return fmin(exp(2.99573227355 + 0.01 * value * 6.90775527898), (m_sampleRate / 2.0) - 500.0); }, 0.0, 100.0, 1000);

and called like:

processor.freqScale[value];
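
For reference, this is roughly the work I’d expect a lookup with linear interpolation to do per call. It’s a plain C++ sketch and not JUCE’s actual implementation; `SimpleLookupTable` and all of its members are hypothetical names. Each lookup costs a scale, a clamp, a float-to-int conversion, two memory reads and a multiply-add, which is not obviously cheaper than a single `exp` on a modern CPU.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical stand-in for illustration only; this is NOT how
// dsp::LookupTableTransform is necessarily implemented.
class SimpleLookupTable
{
public:
    SimpleLookupTable(const std::function<float(float)>& fn,
                      float minInput, float maxInput, std::size_t numPoints)
        : minValue(minInput), table(numPoints)
    {
        assert(numPoints >= 2 && maxInput > minInput);

        // Table positions per unit of input.
        scale = static_cast<float>(numPoints - 1) / (maxInput - minInput);

        // Evaluate the expensive function once per table point.
        for (std::size_t i = 0; i < numPoints; ++i)
            table[i] = fn(minInput + static_cast<float>(i) / scale);
    }

    // Clamped lookup with linear interpolation between neighbouring points.
    float operator()(float x) const
    {
        const float last = static_cast<float>(table.size() - 1);
        float pos = (x - minValue) * scale;
        pos = pos < 0.0f ? 0.0f : (pos > last ? last : pos);

        std::size_t i = static_cast<std::size_t>(pos);
        if (i >= table.size() - 1)
            i = table.size() - 2; // keep i + 1 inside the table

        const float frac = pos - static_cast<float>(i);
        return table[i] + frac * (table[i + 1] - table[i]);
    }

private:
    float minValue;
    float scale = 1.0f;
    std::vector<float> table;
};
```

With 1000 points over 0 to 100, the interpolation error for this particular curve is tiny, so accuracy isn’t the concern here, only speed.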

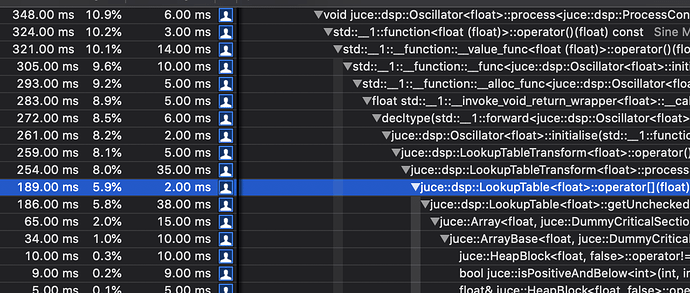

But after CPU profiling a debug build (in Visual Studio 2015), the lookup table appears to use significantly more CPU than calling the function directly. Of course, I suppose that is simply because I was running in debug mode.

I can’t look at the function directly when profiling in release mode, but eyeballing the CPU usage in Ableton with the plugin’s release build, the lookup table seemed to make no difference at best, and may even have slightly increased usage. Again, this may not be fully reliable, as I know my CPU dynamically changes its clock speed depending on load.
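
Something like the following timing helper would probably give a more trustworthy comparison than watching the host’s CPU meter, provided it’s compiled in release mode. It’s a rough sketch using only the standard library; `nanosecondsPerCall` and its parameters are hypothetical names, and the numbers will still be affected by clock scaling and cache state.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>

// Hypothetical helper: calls fn `iterations` times with inputs sweeping
// 0.0 to 100.0 in steps of 0.1 and returns the average time per call
// in nanoseconds.
template <typename Fn>
double nanosecondsPerCall(Fn&& fn, std::size_t iterations, double& checksumOut)
{
    assert(iterations > 0);

    double checksum = 0.0;
    const auto start = std::chrono::steady_clock::now();

    for (std::size_t i = 0; i < iterations; ++i)
        checksum += fn(static_cast<float>(i % 1001) * 0.1f);

    const auto stop = std::chrono::steady_clock::now();

    // Hand the sum back to the caller so the optimiser cannot drop the loop.
    checksumOut = checksum;

    const std::chrono::duration<double, std::nano> elapsed = stop - start;
    return elapsed.count() / static_cast<double>(iterations);
}
```

The idea would be to call it once with a lambda wrapping the direct `exp` expression and once with a lambda wrapping the table lookup, using a few million iterations each, and compare the two results.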

So is there something wrong here? Or are operations like pow and exp just too cheap to be worth replacing with a lookup table?

Cheers!