And by the way, I know LunarG released their Vulkan SDK for macOS too (see “Benefits of the Vulkan macOS SDK” from LunarG), so it might be pretty easy to port.

I looked a bit into the OpenGLGraphicsContext. You know, the underlying implementation of the LowLevelGraphicsContext.

It’s kind of amazing that when you actually inherit from RenderingHelpers::SavedStateBase<…>, you only have to implement a few methods to get the complete JUCE Graphics context working:

renderImageTransformed()

fillWithSolidColour()

fillWithGradient()

beginTransparencyLayer()

endTransparencyLayer()

… and so on. Just implementing the “fill with solid colour” alone makes almost all of the stroke/fill path stuff visible.

Now looking at the OpenGLGraphicsContext internals I noticed there are a few shaders declared there.

```cpp
SolidColourProgram solidColourProgram;
SolidColourMaskedProgram solidColourMasked;
RadialGradientProgram radialGradient;
RadialGradientMaskedProgram radialGradientMasked;
LinearGradient1Program linearGradient1;
LinearGradient1MaskedProgram linearGradient1Masked;
LinearGradient2Program linearGradient2;
LinearGradient2MaskedProgram linearGradient2Masked;
ImageProgram image;
ImageMaskedProgram imageMasked;
TiledImageProgram tiledImage;
TiledImageMaskedProgram tiledImageMasked;
CopyTextureProgram copyTexture;
MaskTextureProgram maskTexture;
```

Initially I thought I had to implement every one of them. But then I noticed, for example, the TiledImageMaskedProgram is only used in one place.

```cpp
void setShaderForTiledImageFill (const TextureInfo& textureInfo, const AffineTransform& transform,
                                 int maskTextureID, const Rectangle<int>* maskArea, bool isTiledFill)
{
    ...

    if (maskArea != nullptr)
    {
        // Here the TiledImageMaskedProgram is used.
    }

    ...
}
```

Furthermore, this method is only called in one place:

```cpp
...
state->setShaderForTiledImageFill (state->cachedImageList->getTextureFor (src), trans, 0, nullptr, tiledFill);
...
```

The maskArea is always nullptr!

Not only that: are all the Masked programs actually unused? Kind of surprising.

So … is it leftover code, or was there initially a plan to apply masking via shaders? Not sure how to interpret this.

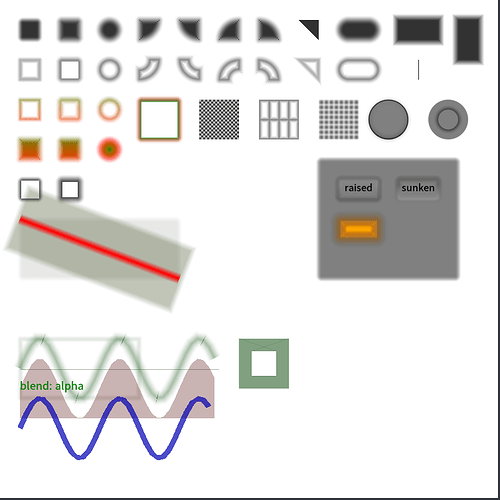

Anyway. Running the GraphicsDemo example project with a rudimentary VulkanContext, I measured the performance and noticed it performs worst when clipping or clip regions are involved.

This leads me to conclude that the biggest performance hit comes from clipping: any clipping of the drawing requires CPU preprocessing, namely the EdgeTable and scanline processing in RenderingHelpers::SavedStateBase.

I guess that’s why a bunch of simple rotating images perform so badly compared to a traditional “render one transformed quad” approach, with which thousands (instead of 10) are possible at 60 fps.
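To make that CPU cost concrete, here is a minimal sketch of what scanline rasterization with anti-aliasing boils down to. It is not JUCE’s actual EdgeTable (which stores per-scanline edge points with sub-pixel levels in its own format); it is a simplified stand-in that samples each pixel row at a few sub-scanlines, applies the non-zero winding rule, and accumulates horizontal span coverage. Every extra path or clip region feeds more work into loops like these, all on one CPU thread.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

struct Point { double x, y; };

// Rasterizes a closed polygon into an 8-bit coverage mask (width * height).
// Each pixel row is sampled at `subRows` sub-scanlines; along each sub-scanline the
// non-zero winding rule decides which spans are inside, and the overlap of each span
// with each pixel is accumulated as horizontal coverage.
std::vector<unsigned char> rasterizeCoverage (const std::vector<Point>& poly,
                                              int width, int height, int subRows = 4)
{
    assert (poly.size() >= 3 && subRows > 0);
    std::vector<double> acc (static_cast<std::size_t> (width * height), 0.0);

    for (int row = 0; row < height; ++row)
    {
        for (int s = 0; s < subRows; ++s)
        {
            const double y = row + (s + 0.5) / subRows; // sub-scanline centre

            // Crossings of this sub-scanline with every edge, tagged with edge direction.
            std::vector<std::pair<double, int>> crossings;
            for (std::size_t i = 0; i < poly.size(); ++i)
            {
                const Point a = poly[i];
                const Point b = poly[(i + 1) % poly.size()];
                if ((a.y <= y && b.y > y) || (b.y <= y && a.y > y))
                {
                    const double t = (y - a.y) / (b.y - a.y);
                    crossings.push_back ({ a.x + t * (b.x - a.x), b.y > a.y ? 1 : -1 });
                }
            }
            std::sort (crossings.begin(), crossings.end());

            // Walk the crossings; wherever the winding number is non-zero, add span coverage.
            int winding = 0;
            for (std::size_t i = 0; i + 1 < crossings.size(); ++i)
            {
                winding += crossings[i].second;
                if (winding == 0)
                    continue;

                const double x0 = std::clamp (crossings[i].first, 0.0, double (width));
                const double x1 = std::clamp (crossings[i + 1].first, 0.0, double (width));
                for (int px = int (std::floor (x0)); px < width && px < x1; ++px)
                {
                    const double overlap = std::min (x1, px + 1.0) - std::max (x0, double (px));
                    if (overlap > 0)
                        acc[static_cast<std::size_t> (row * width + px)] += overlap / subRows;
                }
            }
        }
    }

    std::vector<unsigned char> mask (acc.size());
    for (std::size_t i = 0; i < acc.size(); ++i)
        mask[i] = static_cast<unsigned char> (std::lround (std::clamp (acc[i], 0.0, 1.0) * 255.0));
    return mask;
}
```

For example, a square from (0.5, 0) to (1.5, 1) in a 2×1 mask yields 128 for both pixels, since each is half covered.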

Seeing this and the unused masked shader stuff, I guess initially there were plans to solve this issue? But to simplify the implementation, the software rasterizer code from RenderingHelpers::SavedStateBase was used?

If anyone has looked into this (or even @jules): what are your thoughts?

Just by debugging it, I can see that a lot of work went into the clipping / clip region / edge table code. But I somehow have the feeling that a lot of it is unnecessary, since it was originally only intended for the software rasterizer, and some of it could be solved with a mask texture, or perhaps the stencil buffer in OpenGL / Vulkan.

In summary: using Vulkan is not that hard once you get past the initial setup. At the moment there seems to be a fundamental problem with the complexity of the clip regions. And most of the work is not Vulkan related, but preparing the paths and regions for ANY kind of GPU rendering approach.

The longer I look at it, the more it becomes clear:

The method of how paths are rasterized in JUCE is probably the easiest compromise to draw anti aliased geometry.

It’s not the best performance, yes, but on the upside you get high-quality AA without an overly complicated geometry triangulation + clipping + distance field method. AND it doesn’t depend on the GPU’s MSAA capabilities.

Now if that’s still not enough for you, here are a few papers and resources for a possible implementation:

At first this looks like a nice approach. But the problem I see here is the questionable quality. Not sure if it’s enough for UI vector graphics; it looks very blurry to me. I mean, for a game with many moving vector entities it’s not that important. But for crisp UIs, sharp-looking fonts and lines are mandatory!

Another problem: The method is only for lines? It’s probably somehow possible to apply it to a filled shape. Or the boundary. Although not trivial.

Using the stencil buffer this technique is almost magical. Seems like a very general solution for solid shapes with uniform fill colour. Unfortunately it doesn’t really solve the AA problem and relies on multi sample AA of the hardware and is very hacky in regards to the stencil buffer.
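The stencil trick can be emulated on the CPU to see why it works for any polygon, convex or not: draw a triangle fan from an arbitrary pivot over every edge and invert a per-pixel bit for each triangle that covers the pixel; pixels left with an odd count are inside under the even-odd rule. This minimal sketch tests only pixel centres, so it is aliased, which is exactly the AA limitation mentioned above. The pivot choice and the half-open point-in-triangle rule (standing in for the GPU’s fill rule) are illustrative assumptions, not taken from the linked article.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

struct Point { double x, y; };

// Point-in-triangle via a crossing-number test with a half-open rule: an edge counts
// when a.y <= p.y < b.y and the crossing lies strictly to the right of p. A point on an
// edge shared by two fan triangles is therefore counted by exactly one of them.
static bool insideTriangle (Point p, const Point (&tri)[3])
{
    bool inside = false;
    for (int i = 0; i < 3; ++i)
    {
        Point a = tri[i], b = tri[(i + 1) % 3];
        if (a.y > b.y)
            std::swap (a, b);                       // canonical order for consistent rounding
        if (p.y >= a.y && p.y < b.y)
        {
            const double xc = a.x + (p.y - a.y) / (b.y - a.y) * (b.x - a.x);
            if (p.x < xc)
                inside = ! inside;
        }
    }
    return inside;
}

// Emulates the stencil pass: each fan triangle (pivot, v[i], v[i+1]) inverts the
// stencil bit of every pixel centre it covers. Pixels with an odd count are inside
// (even-odd rule); a cover pass would then fill only those pixels.
std::vector<bool> stencilEvenOdd (const std::vector<Point>& poly, int width, int height)
{
    assert (poly.size() >= 3);
    std::vector<bool> stencil (static_cast<std::size_t> (width * height), false);
    const Point pivot = poly[0];

    // Triangles touching the pivot's own edges are degenerate and can be skipped.
    for (std::size_t i = 1; i + 1 < poly.size(); ++i)
    {
        const Point tri[3] = { pivot, poly[i], poly[i + 1] };
        for (int y = 0; y < height; ++y)
            for (int x = 0; x < width; ++x)
                if (insideTriangle ({ x + 0.5, y + 0.5 }, tri))
                {
                    const auto idx = static_cast<std::size_t> (y * width + x);
                    stencil[idx] = ! stencil[idx];
                }
    }
    return stencil;
}
```

Note that for a concave shape, some fan triangles extend outside the polygon. Those pixels simply get toggled an even number of times, which is why no triangulation is needed.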

Here signed distance fields are used. I think normally they are precalculated to allow something called “vector textures”.

Valve did something similar for font textures (PDF):

https://developer.amd.com/wordpress/media/2012/10/Green-Improved_Alpha-Tested_Magnification_for_Vector_Textures_and_Special_Effects(Siggraph07).pdf

Unfortunately the pre-calculated condition is a bit problematic. In the second article it’s calculated on the fly, but it seems too specific for a general polygon/path fill method. In this case it’s for a rounded rectangle and seems a bit hacky.

This is by far the most math/geometry heavy article. But also the most promising one. Maybe some of you heard of it as the Loop/Blinn method.

Seems like a generalization of the previous distance field stuff.

In section 25.5: “[…] antialiasing in the pixel shader based on a signed distance approximation to a curve boundary.”

Ref: Resolution Independent Curve Rendering using Programmable Graphics Hardware

https://www.microsoft.com/en-us/research/wp-content/uploads/2005/01/p1000-loop.pdf
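For reference, the quadratic case of the Loop/Blinn method reduces to a few lines of math (as in the paper and in GPU Gems 3, chapter 25). The three control points of a curve triangle get the texture coordinates (0,0), (1/2,0) and (1,1). The rasterizer interpolates (u, v), and the pixel shader evaluates the implicit function below. The screen-space partial derivatives come from the derivative instructions (ddx/ddy in HLSL, dFdx/dFdy in GLSL). Which sign counts as “inside” depends on the curve’s orientation, and the alpha mapping in the last line is one common choice, not the only one.

$$
f(u, v) = u^2 - v, \qquad \text{pixel is on the filled side} \iff f(u, v) < 0
$$

$$
\nabla f = \left( 2u\,\frac{\partial u}{\partial x} - \frac{\partial v}{\partial x},\;\; 2u\,\frac{\partial u}{\partial y} - \frac{\partial v}{\partial y} \right),
\qquad
d \approx \frac{f(u, v)}{\lVert \nabla f \rVert}
$$

$$
\alpha = \operatorname{clamp}\!\left(\tfrac{1}{2} - d,\; 0,\; 1\right)
$$

Cubic segments need a more involved classification step on the CPU (serpentine, loop, cusp) before they reduce to a similar per-pixel test.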

Now, as you can see, there are quite a few resources. The problem is: it’s hard enough to implement them for a single kind of shape. On top of that, it would be necessary to incorporate the JUCE clipping regions; “clip to alpha image” and “clip to path” are especially problematic.

Considering all of this, I think there are two viable options:

First

Implement a general solution for the JUCE LowLevelGraphicsContext implementations using:

a.) Polygon Clipping: Clipper Lib (C++) http://www.angusj.com/delphi/clipper.php

The input is a JUCE Path. It can be flattened into linear line segments into a simple polygon object. Then the context clipping regions can be applied.

b.) Polygon Triangulation: poly2tri (C++) https://github.com/jhasse/poly2tri

Sweep‐line algorithm for constrained Delaunay triangulation.

The clipped polygon objects are converted into a list of triangles. Necessary to avoid all kinds of special cases and to simplify the shader and vertex data creation.

c.) Loop/Blinn method: ??? It was written in 2005. Where on earth are the implementations ???

Yes, unfortunately I didn’t find a concrete implementation. Is there a reason for that?

In the end it’s a bunch of GLSL shaders with custom vertex buffers and a few uniform parameters that provide the shader with the curve / stroke / fill parameters.
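To give a feel for step a.), here is a minimal sketch of clipping a flattened polygon against a rectangular clip region. It deliberately swaps in the classic Sutherland-Hodgman algorithm instead of Clipper. It only handles convex clip regions (such as a single clip rectangle) and does not resolve self-intersections, which is exactly why a general library like Clipper would still be needed for “clip to path”.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

struct Point { double x, y; };
struct Rect { double left, top, right, bottom; };
using Polygon = std::vector<Point>;

// Clips `poly` against one half-plane. `inside` tests a point; `intersect` returns where
// segment a->b crosses the half-plane's boundary (only called when it does cross).
static Polygon clipAgainstEdge (const Polygon& poly,
                                const std::function<bool (Point)>& inside,
                                const std::function<Point (Point, Point)>& intersect)
{
    Polygon out;
    for (std::size_t i = 0; i < poly.size(); ++i)
    {
        const Point cur = poly[i];
        const Point prev = poly[(i + poly.size() - 1) % poly.size()];
        const bool curIn = inside (cur), prevIn = inside (prev);

        if (curIn && ! prevIn)  out.push_back (intersect (prev, cur)); // entering
        if (curIn)              out.push_back (cur);
        if (! curIn && prevIn)  out.push_back (intersect (prev, cur)); // leaving
    }
    return out;
}

// Sutherland-Hodgman: clip against the four sides of the rectangle in turn.
// Correct for convex clip regions only; it cannot clip to an arbitrary path.
Polygon clipToRect (Polygon poly, const Rect& r)
{
    assert (r.left <= r.right && r.top <= r.bottom);

    const auto atX = [] (double x) {
        return [x] (Point a, Point b) { return Point { x, a.y + (x - a.x) / (b.x - a.x) * (b.y - a.y) }; };
    };
    const auto atY = [] (double y) {
        return [y] (Point a, Point b) { return Point { a.x + (y - a.y) / (b.y - a.y) * (b.x - a.x), y }; };
    };

    poly = clipAgainstEdge (poly, [&] (Point p) { return p.x >= r.left; },   atX (r.left));
    poly = clipAgainstEdge (poly, [&] (Point p) { return p.x <= r.right; },  atX (r.right));
    poly = clipAgainstEdge (poly, [&] (Point p) { return p.y >= r.top; },    atY (r.top));
    poly = clipAgainstEdge (poly, [&] (Point p) { return p.y <= r.bottom; }, atY (r.bottom));
    return poly;
}

// Absolute polygon area (shoelace formula), handy for checking the result.
double area (const Polygon& p)
{
    double twice = 0.0;
    for (std::size_t i = 0; i < p.size(); ++i)
    {
        const Point a = p[i], b = p[(i + 1) % p.size()];
        twice += a.x * b.y - b.x * a.y;
    }
    return std::abs (twice) * 0.5;
}
```

The output may contain duplicate or collinear vertices where the polygon touches the clip boundary. A triangulator downstream has to tolerate that.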

If all of this sounds like a crazy undertaking. Well …

Second

Use the existing JUCE CPU scanline rasterization. Leave the JUCE / OpenGL / Vulkan context implementation as it is.

So how do we get better performance then? Maybe a simpler approach is to build a set of commonly used special implementations. e.g.

a) Problems with CPU-intensive path rasterization for your waveform display? Well, it’s 1D data! How about a GPU shader that gets the 1D data as vertex attributes and fills it in a fragment shader?

b) Problems with rotating images performing badly? How about a simple quad shader that uses hardware MSAA? Alternatively, a supersampled offscreen render of the image for AA.

c) Problems with complex SVG rasterization? Well, it’s static data. How about precalculating a Vulkan command buffer instead of rebuilding it every frame? Then drawing SVG images is just a matter of managing and submitting cached data.
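For idea a), here is a minimal sketch of how 1D sample data could become GPU geometry without any path flattening or edge table. It reduces the samples to one min/max pair per pixel column and emits a triangle strip (two vertices per column) that a trivial shader can fill. The per-column reduction happens on the CPU here for clarity; it could equally move into a vertex or compute shader. The vertex layout is an assumption, and anti-aliasing the strip edges would still need extra work.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Vertex { float x, y; };

// Builds a triangle-strip envelope for a waveform: for each pixel column, the min and max
// of the samples falling into that column become a top and a bottom vertex. Drawn as a
// triangle strip, consecutive columns form quads covering the min..max envelope.
// Sample values in [-1, 1] map to y in [height, 0] (y grows downwards).
std::vector<Vertex> waveformStrip (const std::vector<float>& samples, int columns, float height)
{
    assert (columns > 0 && ! samples.empty());
    const auto toY = [height] (float v) { return (1.0f - v) * 0.5f * height; };
    const auto n = samples.size(), cols = static_cast<std::size_t> (columns);

    std::vector<Vertex> strip;
    strip.reserve (cols * 2);

    for (std::size_t c = 0; c < cols; ++c)
    {
        // Samples covered by this column; always at least one.
        const std::size_t begin = n * c / cols;
        const std::size_t end = std::max (n * (c + 1) / cols, begin + 1);
        const auto range = std::minmax_element (samples.begin() + static_cast<std::ptrdiff_t> (begin),
                                                samples.begin() + static_cast<std::ptrdiff_t> (end));
        const float x = static_cast<float> (c) + 0.5f;
        strip.push_back ({ x, toY (*range.second) }); // top (maximum)
        strip.push_back ({ x, toY (*range.first) });  // bottom (minimum)
    }
    return strip;
}
```

The resulting vertices could be uploaded once per frame and drawn with a triangle-strip topology (VK_PRIMITIVE_TOPOLOGY_TRIANGLE_STRIP in Vulkan). The CPU work is a single linear pass over the samples.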

Just a bit of rambling and ideas. If you have any resources or ideas for the mentioned problems, I’m curious to hear them!

I use SDF shaders to draw anti-aliased basic shapes such as disks, rounded rectangles in my engine and it works wonders. You also get the ability to have blur / shadows basically for free.
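As a minimal sketch of the SDF approach for basic shapes, here is the rounded-box distance function popularised by Inigo Quilez, evaluated in plain C++ the same way a fragment shader would evaluate it per pixel. Coverage comes from mapping the distance through a smoothstep over roughly one pixel. Widening that transition band is what gives the blur/shadow “for free”. The function names and the default one-pixel band are illustrative choices.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Signed distance from point (px, py) to a rounded box centred at the origin with
// half-extents (hx, hy) and corner radius r. Negative inside, positive outside.
double sdRoundedBox (double px, double py, double hx, double hy, double r)
{
    const double qx = std::abs (px) - hx + r;
    const double qy = std::abs (py) - hy + r;
    const double outside = std::hypot (std::max (qx, 0.0), std::max (qy, 0.0));
    const double inside = std::min (std::max (qx, qy), 0.0);
    return outside + inside - r;
}

// GLSL-style smoothstep.
double smoothstep (double edge0, double edge1, double x)
{
    const double t = std::clamp ((x - edge0) / (edge1 - edge0), 0.0, 1.0);
    return t * t * (3.0 - 2.0 * t);
}

// Coverage (alpha) for a pixel whose centre lies at signed distance d from the edge.
// `softness` is the width of the transition band in pixels: about 1 gives crisp AA,
// larger values give a blurred edge or soft shadow without a separate filter pass.
double coverage (double d, double softness = 1.0)
{
    assert (softness > 0.0);
    return 1.0 - smoothstep (-0.5 * softness, 0.5 * softness, d);
}
```

In a shader, (px, py) would be the fragment position relative to the box centre, so the only per-shape data is a handful of uniforms or instance attributes.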

For arbitrary paths I started experimenting with a 2-pass technique where you first draw the (aliased) interior of the polygon, then add AA fringes. You also have to send an orientation vector to the shader so you know where you are in the shape. Haven’t gotten round to finishing it yet, as other priorities got in the way.

I recommend reading Inigo Quilez’s excellent articles on signed distance functions, such as this one

An example of this technique:

With some blur (no separate filter pass, straight in the shader):

Looks interesting

I want to mention this: in my previous post I considered the Loop/Blinn method. I have since found out that this method is apparently patented by Microsoft, which explains why there is no open source implementation. Fortunately it isn’t necessary anyway, unless you want to provide your shader directly with quadratic and cubic path data.

If the path is flattened and something like SDF on linear segments is used instead, it could be easier to implement. Unfortunately I have no idea how. So in your method the SDFs of specific shapes are used. Does this mean you have specific calls and shaders for every single variation of shape?

I’m searching for a general method that can be applied to linear line segments, so that in the end juce::Path can be used for everything. This would make things a lot easier, and it is also necessary because juce::Graphics uses clipping regions. The only requirement: everything is anti-aliased. Something like this:

```cpp
Path p = ...;           // rectangle, ellipse, polygon, line, anything!
Path clipRegion = ...;  // Graphics clipping regions

p = flattenPath (p);                        // Convert quadratic, cubic segments to linear
p = clipToShape (p, clipRegion);            // Clip any shape to clipping region (Clipper Lib)
triangleData = polygonToTriangle (p);       // Triangulation (poly2tri)
vertexData = prepareShader (triangleData);  // Calculate SDF vertex + shader data
```

Do you think this is possible purely with SDF?
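The first step of such a pipeline is the one JUCE already covers with juce::PathFlatteningIterator. For readers without JUCE at hand, here is a minimal stand-alone sketch of flattening a single cubic segment. It subdivides uniformly in t and picks the step count from a conservative bound on the curve’s second derivative, so the distance between the curve and the polyline stays below a tolerance. This is not how PathFlatteningIterator works internally. The bound used (chord error ≤ h²/8 · max|B″|, with max|B″| ≤ 6 · the largest second difference of the control points) is a standard heuristic and an assumption here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point { double x, y; };

static Point lerp (Point a, Point b, double t) { return { a.x + (b.x - a.x) * t, a.y + (b.y - a.y) * t }; }

// Evaluates a cubic Bezier at t using de Casteljau's algorithm.
static Point cubicAt (Point p0, Point p1, Point p2, Point p3, double t)
{
    const Point a = lerp (p0, p1, t), b = lerp (p1, p2, t), c = lerp (p2, p3, t);
    return lerp (lerp (a, b, t), lerp (b, c, t), t);
}

// Flattens one cubic segment into line segments (the result includes both end points).
// With n uniform steps the error is at most (1/8) * (1/n)^2 * 6 * m, where m is the
// largest second difference of the control points, so n = ceil(sqrt(0.75 * m / tol)).
std::vector<Point> flattenCubic (Point p0, Point p1, Point p2, Point p3, double tolerance)
{
    assert (tolerance > 0.0);
    const double m = std::max (std::hypot (p0.x - 2 * p1.x + p2.x, p0.y - 2 * p1.y + p2.y),
                               std::hypot (p1.x - 2 * p2.x + p3.x, p1.y - 2 * p2.y + p3.y));
    const int steps = std::max (1, static_cast<int> (std::ceil (std::sqrt (0.75 * m / tolerance))));

    std::vector<Point> out;
    out.reserve (static_cast<std::size_t> (steps) + 1);
    for (int i = 0; i <= steps; ++i)
        out.push_back (cubicAt (p0, p1, p2, p3, static_cast<double> (i) / steps));
    return out;
}
```

A straight “cubic” collapses to a single segment, while a strongly curved 100-pixel arc with a quarter-pixel tolerance needs about 21. The number of segments, and therefore everything downstream (clipping, triangulation), grows with curvature and zoom.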


The patent expires in about 5 years, if you can wait!

Seriously though, the disadvantage of Loop-Blinn, AFAIK, is that it computes everything on the CPU, which then requires uploading a texture, which is not going to be the most efficient.

There’s also this very nice NVidia proprietary GL extension which can render arbitrary paths on the GPU, but obviously this is not a solution you can use in a plugin context, as you would limit your userbase.

Maybe some day something like OpenVG will catch on.

So in your method the SDFs of specific shapes are used. Does this mean you have specific calls and shaders for every single variation of shape?

That’s right, that’s what makes it fast: it offloads most of the work to the GPU, which is explicitly designed to handle this kind of work. Most UIs make heavy use of simple primitives anyway.

Should you want to optimize further you can use techniques such as batch sorting and instanced rendering in order to minimize draw calls.
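Batch sorting, sketched minimally: record draw commands, sort them by a pipeline/texture key, and group consecutive commands that share state so each state change happens once per group. This ignores paint order, so it is only valid for commands that do not overlap or are opaque. A real 2D renderer has to respect the painter’s order for translucent overlapping shapes. The command layout here is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// A recorded draw: which pipeline (shader) and texture it needs, plus its vertex range.
struct DrawCommand
{
    std::uint32_t pipeline;
    std::uint32_t texture;
    std::uint32_t firstVertex;
    std::uint32_t vertexCount;
};

// Commands sharing the same state, to be issued together (e.g. one merged buffer or
// one instanced draw).
struct Batch
{
    std::uint32_t pipeline, texture;
    std::vector<DrawCommand> commands;
};

// Groups commands by (pipeline, texture). stable_sort keeps the original submission
// order inside each group.
std::vector<Batch> buildBatches (std::vector<DrawCommand> cmds)
{
    std::stable_sort (cmds.begin(), cmds.end(), [] (const DrawCommand& a, const DrawCommand& b) {
        return a.pipeline != b.pipeline ? a.pipeline < b.pipeline : a.texture < b.texture;
    });

    std::vector<Batch> batches;
    for (const auto& c : cmds)
    {
        if (batches.empty() || batches.back().pipeline != c.pipeline || batches.back().texture != c.texture)
            batches.push_back ({ c.pipeline, c.texture, {} });
        batches.back().commands.push_back (c);
    }
    return batches;
}
```

Four commands using three distinct (pipeline, texture) pairs end up as three batches, i.e. three state changes instead of four.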

I’m searching for a general method that can be applied to linear line segments, so that in the end juce::Path can be used for everything.

That would defeat the whole purpose of the SDF method where you make use of prior knowledge of the mathematical shape (its implicit equation).

I think the general assessment is that OpenVG is dead, since the consortium is not actively working on it.

Read about it: NV_path_rendering. It’s out of the question too, since it’s locked to NVidia.

The most efficient is rare anyway, there is always a compromise between flexibility and performance.

I mean, it’s also possible to rasterize and cache a JUCE path in a Vulkan command buffer. In the end it’s just one draw call and some uniforms. But then it’s a static object, which excludes stuff like waveform rendering and dynamic shapes. Also it would be necessary to pull the batch / cache mechanism out of the context. The normal component Graphics rendering and paths wouldn’t profit from that.

Draw calls, texture uploads or quads per pixel/scanline aren’t the problem at this point. You can throw much more at the GPU. The bandwidth use compared to a modern AAA title is laughable. The problem is the intensive single threaded EdgeTable, scanline, clipping and path rasterization on the CPU.

If anything of it can be avoided, it would give a massive boost.

Measuring the GraphicsDemo, the main bottlenecks are paths and complex shapes (SVG): anything that increases the complexity of the clipping regions and the EdgeTable, which are only necessary because we want the AA quality.

About the specialized SDF shapes. I understand the purpose.

Yes, if we call something like g.fillEllipse(x, y, width, height);, then we could create a virtual “EllipseObject”, which ultimately selects the ellipse SDF shader. Most of the time the object to be drawn lies within the context’s clip region, so we can pass the simplified geometry data 1:1 without further processing.

BUT as soon as a clipping region is involved, the ellipse object will intersect with rectangles or paths. For simple intersections like rect2rect or circle-rect we could spawn or split them into simple SDF objects. But in reality we need to be able to clip any shape. So yes, in the optimal case the context should choose a specialized SDF equation (Rect, Rounded Rect, Ellipse, Star). Even so, a fallback for general paths and polygon shapes is still necessary.
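For the simple intersection cases, SDFs compose cheaply: the intersection of two shapes is the maximum of their signed distances (and union is the minimum), so a circle clipped by a rectangle needs no geometry splitting at all. This minimal sketch uses a circle instead of a general ellipse, because an exact ellipse distance is considerably more involved. Note that max() yields a bound rather than an exact distance in some regions, which is usually fine for a one-pixel AA band. It does not help with clipping to an arbitrary path, which is the fallback problem described above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec2 { double x, y; };

// Circle of radius r centred at c. Negative inside, positive outside.
double sdCircle (Vec2 p, Vec2 c, double r)
{
    return std::hypot (p.x - c.x, p.y - c.y) - r;
}

// Axis-aligned box with centre c and half-extents h.
double sdBox (Vec2 p, Vec2 c, Vec2 h)
{
    const double qx = std::abs (p.x - c.x) - h.x;
    const double qy = std::abs (p.y - c.y) - h.y;
    return std::hypot (std::max (qx, 0.0), std::max (qy, 0.0)) + std::min (std::max (qx, qy), 0.0);
}

// Constructive solid geometry on distance fields.
double opIntersect (double a, double b) { return std::max (a, b); }
double opUnion (double a, double b)     { return std::min (a, b); }

// A circle clipped to a rectangular clip region, expressed as one distance function
// that a single shader could evaluate per pixel.
double sdClippedCircle (Vec2 p, Vec2 centre, double radius, Vec2 clipCentre, Vec2 clipHalf)
{
    return opIntersect (sdCircle (p, centre, radius), sdBox (p, clipCentre, clipHalf));
}
```

A circle of radius 10 at the origin clipped to |x| ≤ 5 gives −5 at the centre and +2 at (7, 0), a point that lies inside the circle but outside the clip.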

If it was just the shapes without AA, no problem. There are enough triangulation methods out there.

The problem is really the combination. Any polygon shape + anti aliasing + clipping regions.

At the moment I fear it’s not possible and that specialized shaders are much more profitable than optimizing juce::Graphics.

The good news: It’s easy to specialize and cache with Vulkan, since it’s multi-threaded and no context switching like in OpenGL is involved.

They released 1.1 of the spec in 2020, so it seems they are …

Of course I read about it, that’s why I said:

obviously this is not a solution you can use in a plugin context as you would limit your userbase.

Remember not everyone has the latest RTX card on their rig. We’re targeting audio folks, not gamers, they might be running an iGPU on a laptop.

Anyway it’s pretty meaningless to compare things without a proper benchmark on a real-world scenario.

And in any case there’s no one-size fits all solution, if you want complex clipping, blending etc you could use something like this highly optimized cpu renderer blend2d.

It seems overkill to use the CPU for everything though, as most UIs use simple shapes that can be rendered directly on the GPU. A mix-and-match approach would probably be best to balance performance and flexibility.

Sorry, forgot the “I”. I read about it. I also remember reading that Google’s Skia uses it; not sure whether that information is up to date, though. If it were easy to implement, it could be a good “optional” implementation to boost things when the hardware is available. I somehow fear that in the end, using it “under the hood” in a JUCE graphics context will result in the same problems: clipping regions and so on.

Oh, interesting. Although it’s 1.1 of OpenVG Lite, since OpenVG 1.1 was released in 2008. Wow, what happened in the meantime? I don’t think a released spec can be equated with “widely used solution”. Most comments I read gave the opinion that it’s essentially dead. From the looks there isn’t happening much with it. My hopes are not that high. I haven’t read much about it, but where would I start to get it into JUCE?

I don’t know. From audio folks I expect a workstation and a mid-range GPU, or a laptop for sketching ideas. If a producer uses a laptop for their main production, they shouldn’t expect 60 fps and no fan noise, the same way a gamer can’t expect 4K graphics without the necessary equipment. Developing for the lowest possible common denominator will ultimately harm your product. Should we use aliased curves and fonts because some Apple customer uses a MacBook from 2010 for their production? No way : )

But that’s not the problem. Even if it’s an integrated graphics chip. It’s far better to use a specialized chip than wasting CPU cycles.

I don’t really expect a one-size-fits-all solution. But what I do expect is a GPU solution. And if the user doesn’t have the required hardware, it’s always possible to tune down the fidelity. PC games have done this for years: turn off anti-aliasing, lower the texture sizes, turn down the resolution, reduce the amount of VRAM used. Then you can play Minecraft on a crappy laptop : D, which is still an order of magnitude more GPU use than a simple 2D UI.

The benchmark in juce::Graphics case is simple.

- Static Images

- Transformed Images

- Stroked Paths

- Filled Paths

Any filled primitive that is clipped will result in a filled path. This is the reality for every JUCE application. The only difference from case to case is how intensive the parts are used.

From a modern C++ graphics API I expect at least the performance of a browser canvas API. Even a mobile phone renders quicker than the JUCE graphics API. They improved their implementations over the years (Android using Skia / Vulkan), while juce::Graphics is idling, claiming: Everything fine here! You can’t expect more.

Anyway I implemented an OpenGL and Vulkan context in a real-world scenario. An audio plugin using images, font glyph rendering and waveform paths. It will always come down to the EdgeTable preprocessing of the CPU. Don’t know what else we should compare.

Seems obvious. Move the rasterization part to the GPU. They are built for it.

I found this entertaining thread from 2011.

Apparently @jules was facing exactly the same problem. It fundamentally comes down to rendering anti-aliased polygons from a path.

He got quite pissed, people kept posting the same ideas that he already tested. A funny read : D

So from this experience I essentially take the following:

- Hardware-implemented MSAA for vector graphics looks like crap.

- Optimizing or caching a whole stack of drawing operations is not really feasible, since it’s layered 2D graphics.

- OpenGL performance suffers from framebuffer switching.

- For small paths it was not clear whether the triangulation costs more than the edge table calculation.

I don’t want to tear up old wounds, but he tried one idea that I haven’t considered before.

Anti-aliasing via an accumulation buffer. To quote the rough idea:

He got it almost working, but performance was very bad. The problem was that binding / switching frame buffers was very expensive. It might be worth testing the same method in Vulkan.

Why? For one thing, framebuffer switching works differently.

Vulkan uses render passes and could utilize a sub or multi-pass solution and a mask shader with a more specialized format for this purpose.

And then there is instanced rendering. While the OpenGL version JUCE uses doesn’t allow instanced rendering and it would be necessary to render the same geometry with multiple draw calls, in Vulkan we can draw it instanced with different jitter uniforms or push constants to achieve anti aliased 2D geometry in one call.

I’m not sure how good the “jitter polygon into mono mask” method will look. But it’s worth trying. Seems a lot more general than specialized methods for each shape. And the results are tweakable, the AA quality depends on the amount of draw calls of the polygon.
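A CPU emulation of the “jitter polygon into mono mask” idea, as a minimal sketch: render the polygon N times with aliased (pixel-centre) coverage, each time shifted by a different sub-pixel offset, and average the binary results. On the GPU, each pass would be one instance with its offset in a push constant, additively blended into the mask. Here the inside test is a plain even-odd crossing test. The offsets passed in are assumptions, and how they are chosen (grid, random, Poisson disk) determines the quality.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point { double x, y; };

// Even-odd point-in-polygon test (crossing number).
static bool insideEvenOdd (const std::vector<Point>& poly, double px, double py)
{
    bool inside = false;
    for (std::size_t i = 0, j = poly.size() - 1; i < poly.size(); j = i++)
    {
        const Point a = poly[j], b = poly[i];
        if ((a.y > py) != (b.y > py))
        {
            const double xc = a.x + (py - a.y) / (b.y - a.y) * (b.x - a.x);
            if (px < xc)
                inside = ! inside;
        }
    }
    return inside;
}

// Accumulates one aliased "render" per jitter offset into a coverage mask in [0, 1].
// Moving the sample point by +offset is equivalent to moving the geometry by -offset.
std::vector<double> accumulateMask (const std::vector<Point>& poly, int width, int height,
                                    const std::vector<Point>& jitter)
{
    assert (poly.size() >= 3 && ! jitter.empty());
    std::vector<double> mask (static_cast<std::size_t> (width * height), 0.0);

    for (const Point& o : jitter)                       // one pass (instance) per offset
        for (int y = 0; y < height; ++y)
            for (int x = 0; x < width; ++x)
                if (insideEvenOdd (poly, x + 0.5 + o.x, y + 0.5 + o.y))
                    mask[static_cast<std::size_t> (y * width + x)] += 1.0 / jitter.size();

    return mask;
}
```

With N offsets the mask can only take N + 1 distinct values per pixel, which is why the number of passes directly controls the AA quality.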

Did anyone ever try something similar to anti-aliasing via accumulation buffer?

Also @jules, I know you are busy with SOUL, but if you have any input on this topic I would much appreciate it!

I think the fundamental way JUCE renders things needs to be changed, eventually!

Can you brute-force anti-aliasing by rendering at a high resolution and then scaling it down for presentation (“spatial anti-aliasing”, as I think it’s known)?

In the meantime it’s good to know you’ve actually got Vulkan working at all.

All I need is a area to render to and I’ll be set…

I guess you’re not going to share your triangle renderer?

To get nice anti-aliasing you’d have to scale the image up 8x in each direction (64 samples per pixel), or even 16x in each direction (256 samples) to get the full 256 levels of grey between black and white. That would need far too much memory and be very slow even on the GPU. If a lower “oversample” factor is used, steps become visible on tilted lines.
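The arithmetic behind these numbers, as a small sketch: box-filtering a k×k supersampled pixel yields k² binary samples and therefore k² + 1 distinct coverage levels, and the framebuffer grows by the same factor k². The 4-bytes-per-pixel (RGBA8) assumption in bytesNeeded is illustrative.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Distinct coverage levels a k x k box filter can produce (0..k*k samples set).
int coverageLevels (int k)
{
    assert (k > 0);
    return k * k + 1;
}

// Memory for a supersampled RGBA8 framebuffer for the given output size.
std::uint64_t bytesNeeded (int width, int height, int k)
{
    return static_cast<std::uint64_t> (width) * k * static_cast<std::uint64_t> (height) * k * 4;
}

// Box-filter downsample of a single-channel mask supersampled by k in each direction.
std::vector<float> downsample (const std::vector<float>& big, int width, int height, int k)
{
    std::vector<float> out (static_cast<std::size_t> (width * height), 0.0f);
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x)
        {
            float sum = 0.0f;
            for (int sy = 0; sy < k; ++sy)
                for (int sx = 0; sx < k; ++sx)
                    sum += big[static_cast<std::size_t> ((y * k + sy) * width * k + (x * k + sx))];
            out[static_cast<std::size_t> (y * width + x)] = sum / static_cast<float> (k * k);
        }
    return out;
}
```

At k = 8 there are 65 levels; reaching a full 8-bit range takes k = 16, which at 1920×1080 means roughly 2.1 GB for an RGBA8 buffer.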

When I looked into drawing 2d paths with OpenGL a few years back, I found an interesting technique used by MapBox:

https://blog.mapbox.com/drawing-antialiased-lines-with-opengl-8766f34192dc

Not sure whether it’s patent-free, but quality and performance are obviously great, as MapBox was written for older mobile GPUs. They host a few WebGL demos. It uses a combination of normal vectors added to the vertices and a pixel shader that does the antialiasing.

This is SSAA and the simplest form of MSAA. While for games it gives acceptable results, for vector graphics 8x is not even close to the quality the current JUCE renderer offers. While doing tests it seemed at least 32x is necessary to give acceptable results.

Yes, using a bigger framebuffer is just the first thing that comes to mind, but for various reasons it’s a very bad choice. There are many other AA techniques. One of them is some form of accumulation buffer: the geometry gets shifted by “subpixels” and accumulated, which is one way of doing MSAA. I tested this with instanced rendering in Vulkan.

The big advantage: The submitted geometry stays the same. No additional memory for the huge framebuffer.

The problem: It needs two passes. One for the accumulated AA mask, one for the colour/texture/gradient sampling and blending.

Here is the result at 1280x720 with 150% Windows scaling (1920x1080). It’s the path rendering example from the JUCE Graphics demo. With 32x AA it comes close to JUCE’s scanline renderer.

Here a view of the actual geometry from RenderDoc. You see the draw call vkCmdDrawIndexed(3519, 32) … uploaded are the vertices and drawn are 3519 indices. Times 32. While this seems much, it’s still in the acceptable range compared to “one quad per pixel”.

Since this is a two pass method, we also need to draw the mask and apply the actual “fill”. We could draw the mask with one quad, but this will draw a lot of transparent pixels and we want to reduce the fill rate, so we draw a simplified “outline” of the polygons. The outline needed 2211 indices. This time only drawn once.

Here is the intermediate result of the AA mask buffer zoomed in by 800%. Quality seems good enough.

By the way, for the MSAA a non-uniform pattern was chosen (see “Supersampling” on Wikipedia).

The pattern was generated via Poisson disk sampling.
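Poisson disk sampling for such a jitter pattern can be done with simple dart throwing: draw random candidates inside the pixel and keep only those at least minDist away from every accepted sample. This is a minimal sketch (Bridson’s algorithm is the faster standard choice for large point sets). The seed, minimum distance and attempt limit are assumptions. Offsets are returned relative to the pixel centre, in [-0.5, 0.5).

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

struct Offset { double x, y; };

// Dart-throwing Poisson disk sampling inside one pixel: candidates closer than minDist
// to an already accepted sample are rejected. Stops after `count` samples or
// `maxAttempts` candidates. A fixed seed keeps the pattern identical every frame.
std::vector<Offset> poissonDiskOffsets (int count, double minDist,
                                        unsigned seed = 1234, int maxAttempts = 100000)
{
    assert (count > 0 && minDist > 0.0);
    std::mt19937 rng (seed);
    std::uniform_real_distribution<double> dist (-0.5, 0.5);

    std::vector<Offset> samples;
    for (int attempt = 0; attempt < maxAttempts && static_cast<int> (samples.size()) < count; ++attempt)
    {
        const Offset c { dist (rng), dist (rng) };
        bool farEnough = true;
        for (const auto& s : samples)
        {
            if (std::hypot (c.x - s.x, c.y - s.y) < minDist)
            {
                farEnough = false;
                break;
            }
        }
        if (farEnough)
            samples.push_back (c);
    }
    return samples;
}
```

The pattern only needs to be generated once and can then live in a small uniform or push-constant array, one offset per instance.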

Now while this sounds promising, there are unfortunately a lot of problems:

First, the raw process is this:

- Flatten the juce::Path with juce::PathFlatteningIterator, so the polylines can be passed to the clipper.

- ClipperLib takes the lines and applies the clip region of the current context. This converts the path to a PolyTree, which is necessary to resolve any self-intersections and the winding order. Essentially you get a tree of contours that are either outlines or holes.

- Mapbox Earcut (GitHub: mapbox/earcut.hpp, “Fast, header-only polygon triangulation”) is used to perform the triangulation (poly2tri was too slow and didn’t give acceptable results). The result is a list of vertices plus the triangulation as an index buffer, which can be uploaded directly to the GPU.
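At its core Earcut is an ear-clipping triangulator, with hole bridging and z-order hashing on top. Here is a minimal, naive sketch of plain ear clipping for a single simple polygon without holes, producing an index buffer like the one uploaded to the GPU. It assumes a positive (counter-clockwise in a y-up frame) signed area and no self-intersections, which is what the clipping step is meant to guarantee. It also makes the CPU cost visible: every ear candidate is tested against every remaining vertex.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Point { double x, y; };

// Twice the signed area of triangle (o, a, b); positive for a counter-clockwise turn.
static double cross (Point o, Point a, Point b)
{
    return (a.x - o.x) * (b.y - o.y) - (a.y - o.y) * (b.x - o.x);
}

// Inclusive test: points on the triangle's boundary count as inside.
static bool pointInTriangle (Point p, Point a, Point b, Point c)
{
    return cross (a, b, p) >= 0 && cross (b, c, p) >= 0 && cross (c, a, p) >= 0;
}

// Naive ear clipping. Returns triangle indices into `poly`, three per triangle.
std::vector<std::uint32_t> earClip (const std::vector<Point>& poly)
{
    std::vector<std::uint32_t> remaining, indices;
    for (std::uint32_t i = 0; i < poly.size(); ++i)
        remaining.push_back (i);

    while (remaining.size() > 3)
    {
        bool clipped = false;
        for (std::size_t i = 0; i < remaining.size() && ! clipped; ++i)
        {
            const std::uint32_t ia = remaining[(i + remaining.size() - 1) % remaining.size()];
            const std::uint32_t ib = remaining[i];
            const std::uint32_t ic = remaining[(i + 1) % remaining.size()];
            const Point a = poly[ia], b = poly[ib], c = poly[ic];

            if (cross (a, b, c) <= 0)              // reflex or degenerate corner: not an ear
                continue;

            bool containsOther = false;            // an ear must not contain any other vertex
            for (std::uint32_t j : remaining)
                if (j != ia && j != ib && j != ic && pointInTriangle (poly[j], a, b, c))
                    containsOther = true;

            if (! containsOther)
            {
                indices.insert (indices.end(), { ia, ib, ic });
                remaining.erase (remaining.begin() + static_cast<std::ptrdiff_t> (i));
                clipped = true;
            }
        }
        assert (clipped && "input is not a simple counter-clockwise polygon");
        if (! clipped)
            break;                                 // avoid looping forever when asserts are off
    }

    if (remaining.size() == 3)
        indices.insert (indices.end(), { remaining[0], remaining[1], remaining[2] });
    return indices;
}
```

An n-vertex polygon always produces n − 2 triangles, so even a modest glyph outline with a few dozen flattened points yields dozens of triangles per frame.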

Now the problems:

- Clipping per frame is slow! There are also some worst-case scenarios (a rectangle list with many independent lines) where the clipper got completely stuck, probably due to O(N²) complexity.

- Triangulation per frame is slow, and even for small shapes the overall number of triangles is still relatively large.

- An accumulation framebuffer is needed for each “object”, which increases memory use quite a bit. Not as much as naive SSAA, but for many small objects this will probably use up a lot of memory bandwidth.

- I can’t find a way to perform AA mask rendering and filling of the end result in one render pass. This requires the intermediate mask framebuffers to stay in memory until they are merged at the last step.

I’m sure there are a few ways to optimise things here and there, but I’m not satisfied with the result.

Let’s not forget the initial goal. Reduce CPU preprocessing and utilize the GPU. Unfortunately all the clipping and triangulation are performed on the CPU too.

My assessment: For bigger objects and resolutions, this method could outperform the scanline OpenGL method. But for small glyphs and many objects (especially fonts), the performance will probably end up worse.

New Idea:

Reconsidering this, the bottleneck part of the OpenGL scanline method is not the amount of triangles. GPUs can easily handle this.

It’s the pre-processing and submission. There are 3 parts and I think it’s possible to move some of the work to the GPU.

- juce::Path gets flattened by juce::PathFlatteningIterator.

- Paths get converted into a juce::EdgeTable, clipping and more is applied.

- juce::EdgeTable is iterated (via scanline) and vertices (pixel quads) are generated.

The first thing that came to my mind: The scanline is applied per Y span. Isn’t it possible to multithread this? Probably. Now if it can be multithreaded, can’t the GPU do this job?

Compute shaders to the rescue!

From what I understand, it should be possible to upload the juce::EdgeTable data structure directly into a Vulkan memory buffer. From there, a compute shader can be dispatched that runs the scanline iterator in parallel and writes the generated vertices directly to a device-local vertex buffer. This not only reduces the time spent on the CPU, it also avoids the vertex buffer uploads completely!

The same goes for the Path to EdgeTable conversion, although this process is not trivially converted into a parallel algorithm.
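The claim that scanlines are independent can be checked on the CPU first. In this sketch, each row of a hypothetical edge-table-like structure (a sorted list of x positions with winding deltas per row) is turned into span quads independently, with rows split across std::thread workers. A Vulkan compute shader would do the same with one invocation per row, writing into a device-local vertex buffer. The data layout is an assumption, not JUCE’s EdgeTable format. The one catch is output placement: each row must know where to write, which on the GPU usually means a prefix sum over per-row span counts first (done serially here).

```cpp
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical per-row data: x positions where the winding changes, with the delta.
struct Crossing { int x; int delta; };
using Row = std::vector<Crossing>;   // sorted by x

struct Quad { int x0, x1, y; };      // one horizontal span to fill

// Number of filled spans in a row under the non-zero winding rule.
static std::size_t countSpans (const Row& row)
{
    std::size_t spans = 0;
    int winding = 0;
    for (std::size_t i = 0; i + 1 < row.size(); ++i)
    {
        winding += row[i].delta;
        if (winding != 0 && row[i + 1].x > row[i].x)
            ++spans;
    }
    return spans;
}

// Writes the spans of row y into out, starting at index `offset`.
static void emitSpans (const Row& row, int y, std::vector<Quad>& out, std::size_t offset)
{
    int winding = 0;
    for (std::size_t i = 0; i + 1 < row.size(); ++i)
    {
        winding += row[i].delta;
        if (winding != 0 && row[i + 1].x > row[i].x)
            out[offset++] = { row[i].x, row[i + 1].x, y };
    }
}

std::vector<Quad> generateQuadsParallel (const std::vector<Row>& rows, unsigned numThreads)
{
    assert (numThreads > 0);

    // Pass 1: exclusive prefix sum of per-row span counts gives each row its output offset.
    std::vector<std::size_t> offsets (rows.size() + 1, 0);
    for (std::size_t r = 0; r < rows.size(); ++r)
        offsets[r + 1] = offsets[r] + countSpans (rows[r]);

    // Pass 2: rows are independent, so workers fill disjoint ranges of the output.
    std::vector<Quad> quads (offsets.back());
    std::vector<std::thread> workers;
    for (unsigned t = 0; t < numThreads; ++t)
        workers.emplace_back ([&, t] {
            for (std::size_t r = t; r < rows.size(); r += numThreads)
                emitSpans (rows[r], static_cast<int> (r), quads, offsets[r]);
        });
    for (auto& w : workers)
        w.join();
    return quads;
}
```

The prefix sum is the only serial dependency. On the GPU it is itself a well-known parallel primitive, so both passes map onto compute dispatches.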

That was all for now.

Sorry, this is still too much work in progress. At this stage it’s problematic to share things due to various questions about licensing and stuff.

But what do you mean by changing the fundamental way? I thought about this and came to the conclusion that no matter what, you always end up at the same problem. And the more I read into it, the more I see that this is actually a rather open problem that has no clear answers. Even big projects like Skia struggle with this, but found a good method for their purpose.

If anyone has some insights about Skia btw, feel free to share!

Indeed. That’s why I wrote 8x in each direction, which already means 64x overall (and 16x per direction, i.e. 256x overall, for the full 256 grey levels). That makes this approach useless.

I put up the link to the MapBox blog because it seems to cover all the techniques that would be needed for truly fast vector rendering. The fact that it runs at 60 fps on mobile with complex maps means they had to do everything they could to offload work from the CPU to the slowish GLES GPUs, using vertex and pixel shaders and as few draw calls as possible. There is a C++ version of the rendering engine:

It renders fonts, seems to support gradients and is well tested. It might be a nice source of inspiration if you guys want to rewrite the JUCE renderer; too bad it’s based on OpenGL and not Metal/Vulkan.

GPUs like to render large areas at the same time. They don’t like halting in any way, and they really hate rendering in pixel strips!

Also, the fewer ‘draw calls’ (or whatever Vulkan uses) you need, the better. I was hoping that would change, but I don’t know Vulkan at all so I can’t comment.

But I’d bet that idea of “here’s a bunch of polygons, go render it” will still be the best route to take. The parallel nature of GPUs is outstanding.

I’d wager that you could draw an entire plugin, with thousands of polygons in a few milliseconds.

It’s just a matter of trying it. My Barchimes plug-in rendered the entire interface at once, and that was on the first Mac Mini running smoothly inside Logic, over 10 years ago now.

(I haven’t updated it to 64 bit yet, as it’s not my best seller, and I was stopped by Apple’s news about dropping OpenGL.)

I see what you mean by rendering a large offscreen buffer; it would take up a bit of memory that some people may not have.

Rendering a strip of quads to draw lines has been standard practice for decades. If you use ‘smoothstep’ or equivalent, you can make the lines as smooth as you want, no matter the resolution. The quads just have to cover the area of the line. And if you’re using SDFs like someone already mentioned, the line will be rendered by a shader rather than a pre-built line texture, which gives you maximum control over the line and its geometry.
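A minimal sketch of the smoothstep approach for lines: the fragment shader computes the distance from the pixel centre to the segment and maps it to alpha, so the edge stays smooth at any scale as long as the quad covers the line plus the transition band. It is written here as plain C++ mirroring the shader math. The one-pixel transition band is an assumed choice.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec2 { double x, y; };

// Distance from p to the segment a-b: project p onto the segment, clamp, measure.
double distToSegment (Vec2 p, Vec2 a, Vec2 b)
{
    const Vec2 pa { p.x - a.x, p.y - a.y }, ba { b.x - a.x, b.y - a.y };
    const double len2 = ba.x * ba.x + ba.y * ba.y;
    const double h = len2 > 0.0 ? std::clamp ((pa.x * ba.x + pa.y * ba.y) / len2, 0.0, 1.0) : 0.0;
    return std::hypot (pa.x - ba.x * h, pa.y - ba.y * h);
}

// Alpha for a stroke of the given thickness: 1 inside, 0 outside, with a smoothstep
// transition one pixel wide centred on the stroke's edge.
double lineAlpha (Vec2 p, Vec2 a, Vec2 b, double thickness)
{
    assert (thickness > 0.0);
    const double d = distToSegment (p, a, b) - 0.5 * thickness; // signed distance to the stroke edge
    const double t = std::clamp (d + 0.5, 0.0, 1.0);             // smoothstep(-0.5, 0.5, d)
    return 1.0 - t * t * (3.0 - 2.0 * t);
}
```

For a 2-pixel-wide horizontal line, a pixel centre exactly 1 pixel above the axis (on the stroke edge) gets alpha 0.5, and anything more than 1.5 pixels away gets 0.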

I mentioned sharing because I feel we’re all sitting here with bated breath waiting for Juce to offer a head start as an official develop Juce release. I don’t think anybody will judge your code - it’s brilliant you have the time to look into it to be honest.

My bad, I misread it as 8x AA.

You probably overlooked it due to the amount of text, but I posted the link in December 2020 together with another SDF technique. So I’m aware of mapbox-gl and read the article too. I wish it was that easy! Unfortunately the SDF + line segment techniques are not suited for the task.

To summarize it for all… We submit 2 vertices per line point. 4 per line segment. Extrude the segment in the vertex shader via uniform values to get a quad and then use signed distance fields in the fragment shader to “blend” the edges of each segment. So far so good.
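The vertex side of that technique, sketched on the CPU: each segment gets four unique vertices (emitted here as six, for two non-indexed triangles), offset along the segment normal by half the line width plus a feather margin. Each vertex carries its signed distance across the line, so the fragment shader can turn the interpolated value into alpha. This simplified version emits a separate quad per segment without join handling. The attribute layout is an assumption, not MapBox’s actual vertex format.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec2 { float x, y; };

// One vertex of an extruded line quad. `across` is the signed distance from the line's
// centre in pixels. Interpolated across the quad, it lets the fragment shader compute
// alpha = clamp(halfWidth + 0.5 - |across|, 0, 1) for an anti-aliased edge.
struct LineVertex { Vec2 pos; float across; };

// Extrudes each segment of a polyline into a quad (two triangles, six vertices).
// `feather` widens the quad so the AA fringe is not cut off.
std::vector<LineVertex> extrudePolyline (const std::vector<Vec2>& pts, float width, float feather = 1.0f)
{
    assert (pts.size() >= 2 && width > 0.0f);
    const float h = 0.5f * width + feather;
    std::vector<LineVertex> out;

    for (std::size_t i = 0; i + 1 < pts.size(); ++i)
    {
        const Vec2 a = pts[i], b = pts[i + 1];
        const float dx = b.x - a.x, dy = b.y - a.y;
        const float len = std::sqrt (dx * dx + dy * dy);
        if (len == 0.0f)
            continue;                                  // skip degenerate segments
        const Vec2 n { -dy / len * h, dx / len * h };  // unit normal scaled to the half extent

        const LineVertex a0 { { a.x + n.x, a.y + n.y },  h }, a1 { { a.x - n.x, a.y - n.y }, -h };
        const LineVertex b0 { { b.x + n.x, b.y + n.y },  h }, b1 { { b.x - n.x, b.y - n.y }, -h };
        out.insert (out.end(), { a0, a1, b0, b0, a1, b1 });
    }
    return out;
}
```

Because this handles one segment at a time, it works well for strokes. As discussed next, it does not extend to arbitrary filled polygons.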

There are a few things we have to consider here:

It’s for line segments only! Sure, there are other SDF shapes, but all of them represent only one geometric shape. To get “any polygon” shape, which juce::Path requires, we have to chain or merge the methods. For line segments this is easy, as shown in the article. But what about fills? We can’t submit an N sided polygon and just apply SDF magic, because then we have to consider each vertex for each pixel (because of winding order), which is very expensive! The question about clip regions is also not solved.

Now you could assess: But the maps use fills too!

I’m not 100% sure this is correct, but looking at the maps, it seems they achieve fills by using a regular aliased fill and overdrawing an AA outline. This works for maps, because map outlines do not intersect, and if they do, they are not transparent. So we can fake polygon fills with an AA outline + a regular aliased fill layer. This will not work for gradients, textures, transparency or blend modes. It’s still possible to achieve transparent fills with maps, but only if a “whole layer” uses opacity.

Which would require a separate renderpass/framebuffer. And due to the nature of the dynamic JUCE graphics API we can’t cache this like a map viewer does.

I really can’t see a performant and easy solution because the JUCE graphics API dictates dynamic self intersecting path shapes per frame with clip regions, opacity and fill.

Let’s not forget that even if the SDF rendering performance is superb, the setup probably is not. Maps are static and can be cached to a certain degree. So I think the apparent usefulness of SDF shapes is a bit misleading in this case, because we can’t apply the same preprocessing and have to do it on the fly for each call.

Yeah, I don’t doubt it. If I had the task of achieving this, I would specialize and use a method that fits the requirements. Take your Barchime, for example: if we just put a transparent border around the individual chime graphics, we don’t need AA. We can simply draw one textured quad for each element, which is insanely fast. 60 FPS at 4K guaranteed.
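A sketch of that specialised approach: every chime becomes one textured quad, and all quads go into a single vertex/index buffer, so the whole set can be submitted with one indexed draw. The `QuadBatch` type and its vertex layout are hypothetical; uploading the buffers and binding the texture are left out.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical vertex layout: position + texture coordinate.
struct QuadVertex { float x, y, u, v; };

struct QuadBatch
{
    std::vector<QuadVertex> vertices;
    std::vector<std::uint16_t> indices;

    // Appends one textured quad covering (x, y, w, h):
    // 4 vertices and 6 indices forming two triangles.
    void addQuad (float x, float y, float w, float h)
    {
        const auto base = static_cast<std::uint16_t> (vertices.size());
        vertices.push_back ({ x,     y,     0.0f, 0.0f });
        vertices.push_back ({ x + w, y,     1.0f, 0.0f });
        vertices.push_back ({ x + w, y + h, 1.0f, 1.0f });
        vertices.push_back ({ x,     y + h, 0.0f, 1.0f });

        static constexpr std::uint16_t pattern[] = { 0, 1, 2, 0, 2, 3 };
        for (auto i : pattern)
            indices.push_back (static_cast<std::uint16_t> (base + i));
    }
};
```

16-bit indices limit a batch to 65536 vertices, i.e. 16384 quads, which should be plenty for this kind of UI.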

But doing the same using only juce::Graphics::drawImage? That’s another thing.

Of course, we could also discuss an extended graphics API. For example, something like this:

```cpp
void paint(Graphics& g) override
{
    if(vulkan::isSupported(g))
    {
        vulkan::Graphics sg(g);

        if(!batch)
        {
            VulkanContext& context = sg.getContext();
            batch.reset(new ShaderBatch(context));
            batch->setAntiAliasing(..);
            batch->addDrawable(TextureDrawable(.."chime.png"));
        }

        sg.drawBatch(batch, transform);
    }
    else
    {
        // Fallback if the context is a software renderer
    }
}
```

In this example, we could preprocess the triangulation at the setup stage of a batch and make anti-aliasing optional. The juce::Graphics API stays as it is and can still be used the way we’re used to. The new API on top is optional, and users can build their own batch/cache/drawable objects. Also notice: even though the image here is added at the setup stage, we can still supply an affine transformation and update the texture, because neither changes the vertex attributes, only the bound shader uniforms.
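To illustrate why a transform change doesn't invalidate the cached batch, here is a CPU stand-in for the vertex shader (all names are hypothetical): the vertex data is built once, and each draw only passes a different affine transform, the part that would live in a uniform buffer on the GPU.

```cpp
#include <vector>

struct Vertex2D { float x, y; };

// 2D affine transform as a 2x3 matrix:
// x' = m00*x + m01*y + m02,  y' = m10*x + m11*y + m12
struct Affine2D
{
    float m00 = 1, m01 = 0, m02 = 0;
    float m10 = 0, m11 = 1, m12 = 0;

    Vertex2D apply (Vertex2D v) const
    {
        return { m00 * v.x + m01 * v.y + m02, m10 * v.x + m11 * v.y + m12 };
    }
};

// Hypothetical cached batch: vertices are built once at setup time and never rewritten.
struct CachedBatch
{
    std::vector<Vertex2D> vertices;   // would be uploaded to the GPU once

    // Stand-in for the vertex shader: every vertex is transformed by the
    // per-draw "uniform"; the stored vertex data itself is left untouched.
    std::vector<Vertex2D> draw (const Affine2D& transformUniform) const
    {
        std::vector<Vertex2D> out;
        out.reserve (vertices.size());
        for (const auto& v : vertices)
            out.push_back (transformUniform.apply (v));
        return out;
    }
};
```

Swapping the texture follows the same principle: it changes what is bound for the draw, not the vertices.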

What do you guys think about such an extended API? I see one major disadvantage: it’s a specialization, and the existing juce::Components would not benefit.

I don’t know much about the low-level details of drawing APIs.

But what’s clear to me from this discussion is that the way forward would be to add a new drawing API that fits Vulkan/Metal/etc. in terms of what data is passed in and how it’s stored, so that it doesn’t require any flattening and is GPU-friendly.

I would imagine, broadly speaking, something like this added to Component:

```cpp
virtual void draw(ImprovedGraphics& g)
{
    //default implementation, just calls paint and uses whatever mechanism exists now:
    paint (g.getClassicGraphicsObject());
}
```

Then, over time, both built-in and custom JUCE components can add support.
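A self-contained sketch of that opt-in pattern, using stand-in classes rather than the real juce::Graphics and juce::Component (`ImprovedGraphics`, `ClassicGraphics` and `SketchComponent` are hypothetical names): components that don't override `draw` keep going through `paint`, while upgraded ones take the new path.

```cpp
#include <string>
#include <vector>

// Stand-in for today's juce::Graphics: just records what was drawn.
struct ClassicGraphics
{
    std::vector<std::string> log;
};

// Stand-in for the proposed GPU-friendly context.
struct ImprovedGraphics
{
    ClassicGraphics classic;
    std::vector<std::string> gpuLog;

    ClassicGraphics& getClassicGraphicsObject() { return classic; }
};

struct SketchComponent
{
    virtual ~SketchComponent() = default;

    virtual void paint (ClassicGraphics&) {}

    // Default: no GPU path, fall back to the existing paint() mechanism.
    virtual void draw (ImprovedGraphics& g) { paint (g.getClassicGraphicsObject()); }
};

// An existing component that was never updated: only paint() is implemented.
struct LegacyKnob : SketchComponent
{
    void paint (ClassicGraphics& g) override { g.log.push_back ("knob via paint"); }
};

// An updated component that opts into the new API by overriding draw().
struct UpgradedMeter : SketchComponent
{
    void paint (ClassicGraphics& g) override { g.log.push_back ("meter via paint"); }
    void draw (ImprovedGraphics& g) override { g.gpuLog.push_back ("meter via draw"); }
};
```

The renderer can then call `draw` on every component unconditionally, and existing code keeps working unchanged.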

I’m just looking for an additional 3D/2D renderer to complement Juce’s.

That way I can have all of Juce’s fine and lovely software rendering for most of the static stuff, like buttons and dials, plus rectangular sections with animated GPU rendering for things like fast-moving waveforms and FFT visualisations, and other things we all use to attract customers!

Juce could take the Skia SDK seriously as a replacement. It uses its own backends for hardware rendering, which have taken over most, if not all, of its software rendering. It does everything Juce needs, including a very accomplished SVG renderer. As you may know, it’s used for rendering in Android, Chrome and many others on all platforms. https://skia.org/

Anyway, like I said, for now I just need an additional GPU renderer alongside Juce’s long-established system.

I forgot to mention it here: for anyone interested, the Vulkan modules are now available as an open source project on GitHub. Check it out and post some feedback if you like.

I’m looking at this now, apologies for the wait.

It’s a great speed-up for general Juce graphics for me. A lot of work has gone into this, @parawave.

I wish a Juce team member would look at this; it’s amazing.

The only bug I can see is that ComboBoxes are not drawn properly:

As you can see, it’s the standard LookAndFeel_V4. When the menu button is pressed, the dropdown shows momentarily but then disappears UNTIL the mouse is moved over it. Very strange.