I have programmed audio synthesisers for fun for 5-10 years now. For most synths the CPU is obviously fine; hardly anyone writes anything complex enough to stress a modern CPU.

However, once you enter the realm of engineering and physics, modeling real-life acoustic phenomena (using the best real-world models of real instruments), a modern CPU doesn’t actually go very far.

We are also stuck with the end of Moore’s Law, or at least of easy single-core speed-ups: a single core today is not much faster than one from 5 years ago. If clock speeds had kept climbing the way they did in the old days, we would have 10 GHz processors by now, and maybe I would be happy with that. But that hasn’t happened.

I was watching this video: https://www.youtube.com/watch?v=Y8Ym7hMR100

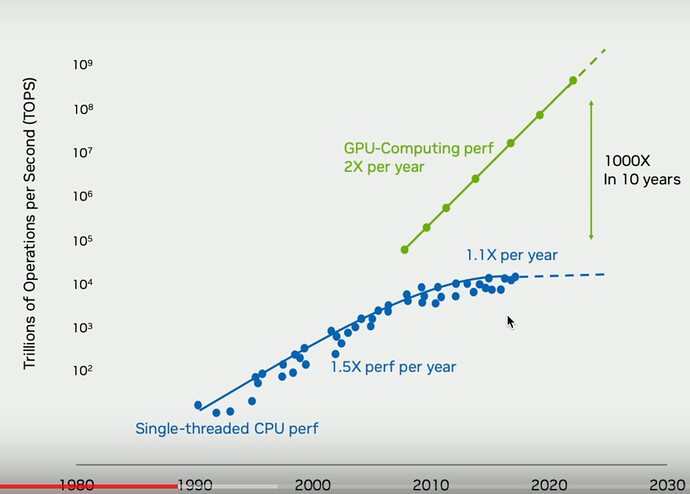

And he shows GPU vs. CPU compute speeds:

He explains that making each CPU core faster would require packing in more transistors, which is no longer possible, whereas with a GPU we can just add more chips.

I don’t understand how GPUs work. In layperson’s terms, what does this mean, specifically for audio? You can have a 10- or 20-core CPU, but in practice you can only use one core per audio plugin, because multithreading a single plugin is basically impossible (audio is linear: each sample typically has to be calculated from the previous one).
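To make the “each sample depends on the last” point concrete, here is a minimal sketch of a one-pole lowpass filter. It is written in Python purely for readability (a JUCE plugin would be written in C++), and the function name `one_pole_lowpass` and coefficient `a` are illustrative, not taken from JUCE or any other library.

```python
def one_pole_lowpass(x, a):
    """Filter the samples in x with y[n] = y[n-1] + a * (x[n] - y[n-1]).

    a is a smoothing coefficient in (0, 1]; the filter starts from silence.
    """
    y = []
    prev = 0.0  # filter state: the previous output sample
    for sample in x:
        # This line needs the output of the previous iteration, so the loop
        # cannot be split into independent pieces and run on several cores.
        prev = prev + a * (sample - prev)
        y.append(prev)
    return y


# A step input, filtered in one pass:
print(one_pole_lowpass([1.0, 1.0, 1.0, 1.0], 0.5))  # [0.5, 0.75, 0.875, 0.9375]
# Naively handing the second half to "another core" restarts the state at 0:
print(one_pole_lowpass([1.0, 1.0], 0.5))            # [0.5, 0.75], not [0.875, 0.9375]
```

The second call shows why splitting one plugin’s stream by time fails: the second half needs the state left behind by the first half, so it cannot start until the first half is finished.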

Can a very advanced GPU act as a “single core” and put all its power into one linear processing thread? I.e., would “adding more chips” to a GPU, as he suggests, benefit the linear audio plugin processing workflow in a way that more CPU cores don’t?

In theory, then, could we add 3-6 GPUs to our system and run 3-6 expensive synths on them? Kind of like how Universal Audio plugins used to (or still do) run on PCI cards?

I am not asking whether this is practical or necessary for the average use case, but whether it is even theoretically possible, and whether you think things will go that way to any extent.

I also found this page about GPU audio, which seems to suggest that what I’m describing may be the case:

Does JUCE allow this, and if so, how? Or will it in the future? How would programming for the GPU compare to programming for the CPU? How would you tell a plugin to do its processing there? Is this VST-compatible, or would it need something else? How would the DAW know what to do?

Thanks for any thoughts.

Addendum:

I found this nice article from NVIDIA that explains GPU vs. CPU processing a bit:

They explain that a GPU essentially “can do thousands of operations at once” and that is what makes it more efficient for things like media rendering.

Presumably, then, we can think of a GPU as a very, very multi-core CPU with good thread synchronization?

I.e., hypothetically, if you are calculating a model with 100-1000 iterations of a formula per timestep (one for each point of the model, none depending on the others within that timestep, much like how graphics programs render each pixel of the screen independently), then a GPU would be ideal for it.
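The situation described here, many points each updated from the previous timestep without depending on one another, is the data-parallel pattern GPUs are built for. As an assumed example (the post does not say which physical model it has in mind), a string simulated with a finite-difference scheme for the 1-D wave equation has exactly this shape. The sketch is in Python for readability, and `update_point`, `string_step` and `c2` are hypothetical names.

```python
def update_point(i, u, u_prev, c2):
    """New displacement of interior point i, using only the two finished timesteps."""
    return 2.0 * u[i] - u_prev[i] + c2 * (u[i + 1] - 2.0 * u[i] + u[i - 1])


def string_step(u, u_prev, c2, order=None):
    """Advance a hypothetical finite-difference string model by one timestep.

    u and u_prev hold the displacements at the current and previous timesteps,
    c2 is the squared Courant number (c*dt/dx)**2 and should be <= 1 for
    stability, and both ends of the string are held fixed at zero.
    """
    n = len(u)
    u_next = [0.0] * n
    indices = range(1, n - 1) if order is None else order
    # Every point reads only u and u_prev, never u_next, so the visiting
    # order is irrelevant: this is the kind of loop a GPU would typically
    # run with one thread per point.
    for i in indices:
        u_next[i] = update_point(i, u, u_prev, c2)
    return u_next


u = [0.0, 0.0, 1.0, 0.0, 0.0]  # displace the middle point, starting at rest
print(string_step(u, u, 0.5))  # [0.0, 0.5, 0.0, 0.5, 0.0]
backwards = reversed(range(1, len(u) - 1))
print(string_step(u, u, 0.5, order=backwards) == string_step(u, u, 0.5))  # True
```

Visiting the points backwards gives the same result, which is the independence that lets each point go to its own core or GPU thread. What cannot be parallelised this way is the step from one timestep to the next.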

I believe that, in theory, a GPU could calculate each sample using its many cores, then re-synchronize before the next sample’s calculation (which is based on the previous, finished result) in a way that a multicore CPU can’t.

Is this right?
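The per-sample loop described above (compute every point in parallel, wait for all of them, then start the next sample from the finished result) can be sketched as follows. This is an assumed illustration, not how any GPU audio product actually works: it uses a hypothetical finite-difference string model, and `render_string`, `update_chunk`, `pickup` and the chunking scheme are made-up names for the example. Python’s standard `concurrent.futures` thread pool stands in for the many cores.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def render_string(num_samples, num_points=64, c2=0.25, pickup=16, workers=4):
    """Render num_samples output samples from a hypothetical plucked-string model.

    For every output sample: (1) fan the independent per-point updates out to a
    pool of workers, (2) wait until all of them are done (the "re-synchronize"
    step), then (3) read the output and move on to the next sample.
    """
    u = [0.0] * num_points
    u[num_points // 2] = 1.0   # crude "pluck": displace the middle point
    u_prev = list(u)           # same shape one step earlier, i.e. starting at rest

    # Split the interior points 1 .. num_points-2 into one chunk per worker.
    size = math.ceil((num_points - 2) / workers)
    chunks = [(lo, min(lo + size, num_points - 1))
              for lo in range(1, num_points - 1, size)]

    def update_chunk(lo, hi, cur, prev, nxt):
        # Points in a chunk read only cur/prev, so chunks never wait on each other.
        for i in range(lo, hi):
            nxt[i] = 2.0 * cur[i] - prev[i] + c2 * (cur[i + 1] - 2.0 * cur[i] + cur[i - 1])

    out = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for _ in range(num_samples):
            u_next = [0.0] * num_points  # ends stay fixed at zero
            futures = [pool.submit(update_chunk, lo, hi, u, u_prev, u_next)
                       for lo, hi in chunks]
            for f in futures:            # synchronization point: wait for every chunk
                f.result()
            out.append(u_next[pickup])   # displacement at one point used as the audio output
            u_prev, u = u, u_next        # this finished step feeds the next sample
    return out


# Chunking changes who does the work, not the result:
print(render_string(200, workers=1) == render_string(200, workers=4))  # True
```

CPython’s global interpreter lock means these threads will not actually do the arithmetic in parallel; the sketch only shows the shape of the computation (fan out, wait, next sample), which is the same whether the workers are CPU threads or GPU threads. The step to watch is (2): it happens once per output sample, i.e. 48,000 times per second at a 48 kHz sample rate, leaving about 20.8 µs per sample, so each fan-out-and-wait plus the arithmetic itself has to fit inside that budget on average.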