Given the following code, I’m seeing various discrepancies (using Windows/VS2026 and VS2022):

```cpp
auto testTime = [] (const Time& timeToTest)
{
    const auto millis = timeToTest.toMilliseconds();

    DBG (millis);
    DBG (timeToTest.toISO8601 (true));
    DBG (timeToTest.toString (true, true, true, true));
    DBG (timeToTest.getHours());

    jassert (1455635037100 == millis);
    jassert (2016 == timeToTest.getYear());
    jassert (1 == timeToTest.getMonth()); // JUCE months are 0-based, so 1 == February
    jassert (16 == timeToTest.getDayOfMonth());
    jassert (15 == timeToTest.getHours());
    jassert (3 == timeToTest.getMinutes());
    jassert (57 == timeToTest.getSeconds());
    jassert (100 == timeToTest.getMilliseconds());
};
```

For the first scenario:

```cpp
testTime (Time (2016, 1, 16, 15, 3, 57, 100, false));
```

the output is:

```
1455635037100
2016-02-16T10:03:57.100-05:00
16 Feb 2016 10:03:57
10
```

The problem here is that the hours I’m getting back are wrong: I provided 15 and received 10.

Edit: I’m realising this is likely due to the machine’s time zone (Ottawa) being accounted for. It’s a bit awkward, because I expected to get back what I put in, given that I set `useLocalTime` to `false`.
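As a cross-check that doesn’t depend on JUCE at all, here’s a minimal standard-C++ sketch that splits a millisecond timestamp into calendar fields. The names `DateTimeFields`, `civilFromDays` and `fieldsFromMillis` are my own (not JUCE API); the date conversion is Howard Hinnant’s `civil_from_days` algorithm, and the -300 minute offset assumes the machine was on UTC-05:00 on that date. It suggests the stored instant is correct (15:03:57.100 UTC) and only the local-time view reports 10.

```cpp
#include <cstdint>

// Broken-down calendar fields (month is 1-12 here, unlike JUCE's 0-11).
struct DateTimeFields
{
    std::int64_t year;
    int month, day, hours, minutes, seconds, millis;
};

// Converts days since 1970-01-01 to a proleptic Gregorian date
// (Howard Hinnant's civil_from_days algorithm).
static void civilFromDays (std::int64_t z, std::int64_t& y, int& m, int& d)
{
    z += 719468;
    const std::int64_t era = (z >= 0 ? z : z - 146096) / 146097;
    const std::int64_t doe = z - era * 146097;                                       // [0, 146096]
    const std::int64_t yoe = (doe - doe / 1460 + doe / 36524 - doe / 146096) / 365;  // [0, 399]
    const std::int64_t doy = doe - (365 * yoe + yoe / 4 - yoe / 100);                // [0, 365]
    const std::int64_t mp  = (5 * doy + 2) / 153;                                    // [0, 11]
    d = static_cast<int> (doy - (153 * mp + 2) / 5 + 1);                             // [1, 31]
    m = static_cast<int> (mp < 10 ? mp + 3 : mp - 9);                                // [1, 12]
    y = yoe + era * 400 + (m <= 2 ? 1 : 0);
}

// Splits milliseconds since the Unix epoch into fields. offsetMinutes = 0
// gives UTC; e.g. -300 gives the wall-clock view for UTC-05:00.
DateTimeFields fieldsFromMillis (std::int64_t msSinceEpoch, int offsetMinutes = 0)
{
    const std::int64_t msPerDay = 86400000;
    const std::int64_t ms = msSinceEpoch + static_cast<std::int64_t> (offsetMinutes) * 60000;

    std::int64_t days    = ms / msPerDay;
    std::int64_t msOfDay = ms % msPerDay;

    if (msOfDay < 0) // C++ division truncates; floor it for pre-1970 instants
    {
        msOfDay += msPerDay;
        --days;
    }

    DateTimeFields f {};
    civilFromDays (days, f.year, f.month, f.day);
    f.hours   = static_cast<int> (msOfDay / 3600000);
    f.minutes = static_cast<int> (msOfDay / 60000 % 60);
    f.seconds = static_cast<int> (msOfDay / 1000 % 60);
    f.millis  = static_cast<int> (msOfDay % 1000);
    return f;
}
```

`fieldsFromMillis (1455635037100)` gives 2016-02-16 15:03:57.100, while `fieldsFromMillis (1455635037100, -300)` gives 10:03:57.100 on the same day. The same call with a value of `0` and an offset of -300 gives 1969-12-31 19:00, which matches the output of the second scenario below.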

For the second scenario, using the same time via the ISO 8601 API:

```cpp
testTime (Time::fromISO8601 ("2016-02-16T15:03:57.1Z"));
```

this is what I get:

```
0
1969-12-31T19:00:00.000-05:00
31 Dec 1969 19:00:00
19
```

ISTM the milliseconds parsing is what’s broken: the parser appears to require exactly 3 digits for the fractional seconds, even though ISO 8601 allows a variable number of digits. Here’s the relevant parsing code:

```cpp
if (*t == '.' || *t == ',')
{
    ++t;

    // Reads exactly 3 digits; for ".1Z" it appears to hit 'Z' early and fail
    milliseconds = parseFixedSizeIntAndSkip (t, 3, 0);

    if (milliseconds < 0)
        return {};
}
```
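For comparison, here’s a rough sketch of how a variable-length fraction could be handled. `parseFractionAsMillis` is a hypothetical helper written over a plain `const char*` rather than JUCE’s `String::CharPointerType`. It accepts any number of digits (at least one), scales short fractions up (`.1` → 100 ms, `.05` → 50 ms), and truncates anything beyond millisecond precision.

```cpp
#include <cctype>

// Hypothetical helper (not JUCE API): parses the digits after '.' or ','
// in an ISO 8601 time and converts them to whole milliseconds.
// Returns -1 if no digit follows; otherwise leaves 't' just past the last digit.
int parseFractionAsMillis (const char*& t)
{
    if (! std::isdigit (static_cast<unsigned char> (*t)))
        return -1;

    int millis = 0;
    int digitsRead = 0;

    while (std::isdigit (static_cast<unsigned char> (*t)))
    {
        if (digitsRead < 3) // keep only millisecond precision
        {
            millis = millis * 10 + (*t - '0');
            ++digitsRead;
        }

        ++t; // any further digits are consumed but ignored (truncation)
    }

    // Scale short fractions up: ".1" -> 100 ms, ".12" -> 120 ms
    for (; digitsRead < 3; ++digitsRead)
        millis *= 10;

    return millis;
}
```

Something along these lines could stand in for the `parseFixedSizeIntAndSkip (t, 3, 0)` call, although I haven’t checked how it would interact with the rest of the parser.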

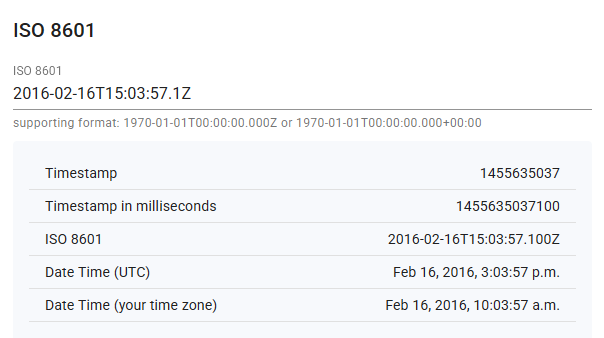

Both should be the same time. Here’s some supporting external validation: