Over the past week, I’ve been dealing with the fallout of bumping our JUCE version from 7.0.5 to 8.0.3. I started two forum threads (here and here) while tracking down the source of the issues my team was having, but fixing the problems covered in those threads has not wholly resolved our issue of multiple plugin instances freezing the DAW.

For background, here are the biggest cases I’ve found where calling into Direct2D is prohibitively slow:

- As mentioned in my previous forum post,

ColourGradientinstantiation is incredibly expensive and there is no user-side way to optimize it aside from just using fewer gradients - Contexts created using

Graphics(const Image&)always perform a GPU-to-CPU image read which is prohibitively expensive- I’m aware this is done to keep the image intact in the event of GPU disconnects, but it should be configurable as the overhead is not worth the caching in our case

applyPendingClipListcalls after path fill/stroke methods andComponent::paintInParentContext- May be related to the

SavedStateissues I’ve seen referenced in the forum.

- May be related to the

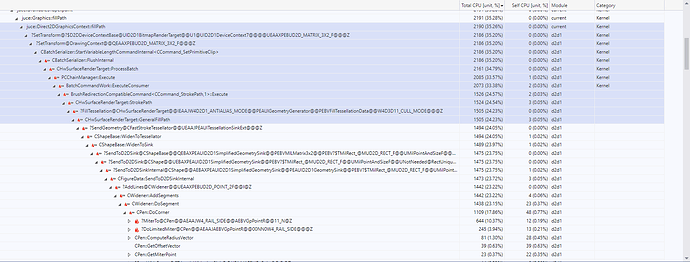

Context flush on certain path operations (see screenshot):This may not be a context flush, but instead just a method that’s namedFlushInternal

For the reasons listed above, I’d like to request a flag to disable Direct2D entirely at compile time. I understand that most of these can be addressed by switching the render engine via ComponentPeer, but the fact that juce::Image will create graphics contexts using the native renderer regardless of what renderer the peer is using results in an irrecoverable breaking change on our end. In most cases this can be solved through optimization, but it would be nice to get some of the benefits of JUCE 8 without needing to perform an optimization pass on a large codebase just to accommodate D2D.

Our team’s code is proprietary, but I’d gladly share my profiling results with the JUCE team privately if it would aid in improving D2D performance.

For reference, the machine I’ve been profiling on has the following specifications:

CPU: AMD Ryzen 7 5800X

GPU: NVIDIA 3090 Ti

RAM: 32GB DDR4

MoBo: MSI Mag X570S Tomahawk Max Wifi