Maybe I’m lazy, but I see no reason not to use the juce::Thread class with ‘startRealtimeThread’, since it creates the necessary parameters from a single milliseconds value.

I just set the expected process time to the callback duration in ms, computed from the sample rate and block size (experts, please chime in and tell me if this is bad; it seems to work OK).

e.g.

int processTimeMaxMs = std::round((1000.0f/sampleRate) * bufferSize);

bool success = startRealtimeThread(RealtimeOptions{10, static_cast<uint32_t>(processTimeMaxMs)});

(Maybe would be better if the JUCE API uses a float value for the duration input.)
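To make the arithmetic concrete, here is a minimal sketch of that calculation in plain C++ (no JUCE needed). The helper names `callbackPeriodMs` and `processTimeMaxMs` are made up for illustration. Note one deliberate difference from the line above: it rounds up and clamps to at least 1 ms instead of using `std::round`, on the assumption that you never want a very small buffer to produce a budget of 0 ms. Whether that is the right choice for your case is something to verify.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Duration of one audio callback in (fractional) milliseconds.
inline double callbackPeriodMs(double sampleRate, int bufferSize)
{
    return 1000.0 * static_cast<double>(bufferSize) / sampleRate;
}

// Whole-millisecond budget for an API that only accepts integers.
// Assumption: round up and never return 0, so the stated budget is
// not shorter than the callback period for small buffers.
inline std::uint32_t processTimeMaxMs(double sampleRate, int bufferSize)
{
    const double ms = std::ceil(callbackPeriodMs(sampleRate, bufferSize));
    return static_cast<std::uint32_t>(std::max(1.0, ms));
}
```

For example, 128 samples at 48 kHz is about 2.67 ms, which becomes 3; 32 samples at 96 kHz is about 0.33 ms, which becomes 1 here but would become 0 with plain rounding.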

If your thread pool is for real-time processing, then there's no need to measure anything IMO. If your thread pool functions take longer than the time available in the callback, there is going to be a problem anyway (you could probably even set this max time to half the callback time). I believe the main point is to give the OS an appropriate cue for balancing priorities against all other system threads based on the expected time, and to keep all the workgroup threads at the same priority. The main thing is to specify a time that is longer than your threads actually take to process (otherwise the OS may demote them), but short enough to keep the priority elevated relative to other system threads.

Just remember that any time the sample rate or block size changes, you need to delete and re-create all your pool threads (to register the new max process time). That should be OK, since you can do it in ‘prepare to play’ (better not to do it in the audio callback itself). Do the same if the HW device selection changes, since the workgroup will likely change. (Here’s where I still have a problem with VST3: maybe it will be possible to retrieve the workgroup from the main audio callback thread, which is something I need to investigate.)

If for some reason you need to change the number of threads during normal audio operation, it’s best to disable real-time multithreaded calculations, maybe mute the audio, and then launch a thread pool re-allocation as an async function (un-registering threads from their assigned workgroup when they are stopped). This is how I’m doing it. I would advise against creating the thread pool threads anywhere other than in prepare to play/device change, or in an async function outside of the main audio callback.

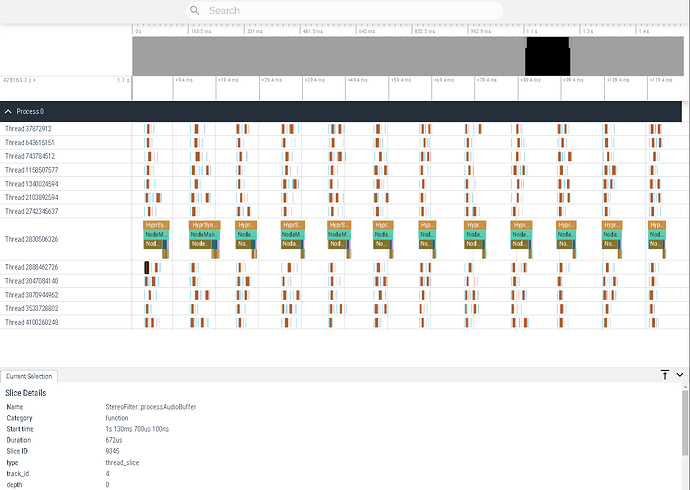

One thing that may help is to add some instrumentation code (use a build option to remove it from the final release) that records timestamps of the main audio callback start/end and of the thread pool threads start/end (or, deeper yet, the individual audio process functions). I used Perfetto (https://ui.perfetto.dev/) to draw traces for each real-time worker thread from a log created this way, and it was extremely useful for validating that my thread pool was working as expected. Maybe the built-in Xcode debugger can also provide this visibility, but with my own log I can keep traces simple and only record information for the specific threads/functions I want to track.

(see "Plugin editor window slow to open (since JUCE 6.1 possibly?)", post #8 by wavesequencer, for some example log screenshots)
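As a rough idea of what such a log could look like, here is a minimal sketch, not the actual logging code from the synth. It assumes the log is written in the Chrome JSON trace event format (complete events, `"ph":"X"`), which the Perfetto UI can open. The names `TraceLog`, `TraceEvent` and `ScopedTrace` are hypothetical. Events go into a preallocated buffer so recording does not allocate; whether `std::chrono::steady_clock` is cheap enough on your platform's audio thread is something to check.

```cpp
#include <algorithm>
#include <atomic>
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <sstream>
#include <string>
#include <vector>

struct TraceEvent
{
    const char* name;        // must point at a string literal (not copied)
    std::uint32_t threadId;  // caller-chosen id, e.g. the worker index
    std::int64_t startUs;
    std::int64_t durationUs;
};

class TraceLog
{
public:
    explicit TraceLog(std::size_t capacity) : events(capacity) {}

    static std::int64_t nowUs()
    {
        using namespace std::chrono;
        return duration_cast<microseconds>(steady_clock::now().time_since_epoch()).count();
    }

    // Each caller claims its own slot, so several threads can record at once.
    // Once the buffer is full, further events are dropped (no allocation).
    void record(const char* name, std::uint32_t threadId,
                std::int64_t startUs, std::int64_t endUs)
    {
        const std::size_t slot = next.fetch_add(1, std::memory_order_relaxed);
        if (slot < events.size())
            events[slot] = { name, threadId, startUs, endUs - startUs };
    }

    // Call only after the recording threads have stopped.
    std::string toJson() const
    {
        const std::size_t count = std::min(next.load(), events.size());
        std::ostringstream out;
        out << "{\"traceEvents\":[";
        for (std::size_t i = 0; i < count; ++i)
        {
            const TraceEvent& e = events[i];
            out << (i ? "," : "")
                << "{\"name\":\"" << e.name << "\",\"ph\":\"X\",\"pid\":1"
                << ",\"tid\":" << e.threadId
                << ",\"ts\":" << e.startUs
                << ",\"dur\":" << e.durationUs << "}";
        }
        out << "]}";
        return out.str();
    }

private:
    std::vector<TraceEvent> events;
    std::atomic<std::size_t> next { 0 };
};

// Records one event covering the lifetime of the object.
struct ScopedTrace
{
    ScopedTrace(TraceLog& l, const char* n, std::uint32_t t)
        : log(l), name(n), tid(t), startUs(TraceLog::nowUs()) {}
    ~ScopedTrace() { log.record(name, tid, startUs, TraceLog::nowUs()); }

    TraceLog& log;
    const char* name;
    std::uint32_t tid;
    std::int64_t startUs;
};
```

You would put a `ScopedTrace` at the top of the audio callback and of each worker's process function, write `toJson()` to a file from a non-real-time thread, and wrap all of it in your own preprocessor flag so it compiles out of release builds.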

When creating a thread pool, it may be best to limit it to the number of logical cores (there’s a JUCE function to get that value, juce::SystemStats::getNumCpus()). If you have more tasks than threads, your thread processing handler just needs to pull jobs from a queue and do as many jobs as it can each loop (I’m also pulling jobs in the main callback rather than have it sit around waiting for the thread pool handler to complete jobs).

I don’t always follow that rule though, and it seems to be OK to create more threads than logical cores. Either the OS is going to time-slice the threads, or you have to loop your thread pool process as many times as necessary to clear the jobs. Overall process time should be similar if your threads do similar jobs. The exact strategy also depends on whether you can predict the number of threads you need vs the number of logical cores available.

It might actually be better to create as many threads as required for the parallel jobs and let the OS time-slice/queue their processing as it sees fit. However, I don’t know if Mac or Windows would penalize an application that asks for too many threads (or what ‘too many’ actually is). If there is a limit, there is also the idea of running parallel real-time audio tasks as separate processes… but I really don’t want to go there.
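The "pull jobs from a queue, and let the main callback help" idea can be sketched like this. It is only an illustration of the job-claiming logic under some loud assumptions: the names `JobBatch`, `workerCount` and `runBatch` are hypothetical, it uses `std::thread` and `std::function` instead of JUCE, and `runBatch` spawns and joins threads per batch purely to keep the example self-contained. A real-time pool would keep persistent threads and wake them each callback, and would avoid anything that can allocate.

```cpp
#include <algorithm>
#include <atomic>
#include <cstddef>
#include <functional>
#include <thread>
#include <utility>
#include <vector>

class JobBatch
{
public:
    explicit JobBatch(std::vector<std::function<void()>> jobsToRun)
        : jobs(std::move(jobsToRun)) {}

    // Claims and runs jobs until none are left to claim.
    // Returns how many jobs this caller ran.
    std::size_t drain()
    {
        std::size_t ran = 0;
        for (;;)
        {
            // Each fetch_add hands out a unique index, so no job runs twice.
            const std::size_t index = nextJob.fetch_add(1, std::memory_order_relaxed);
            if (index >= jobs.size())
                break;
            jobs[index]();
            finished.fetch_add(1, std::memory_order_release);
            ++ran;
        }
        return ran;
    }

    bool allDone() const
    {
        return finished.load(std::memory_order_acquire) == jobs.size();
    }

private:
    std::vector<std::function<void()>> jobs;
    std::atomic<std::size_t> nextJob { 0 };
    std::atomic<std::size_t> finished { 0 };
};

// Caps the worker count at the logical core count (and at the job count).
inline unsigned workerCount(std::size_t numJobs)
{
    const unsigned cores = std::max(1u, std::thread::hardware_concurrency());
    return static_cast<unsigned>(std::min<std::size_t>(cores, numJobs));
}

// Runs the batch on numWorkers threads, with the calling thread helping.
inline void runBatch(JobBatch& batch, unsigned numWorkers)
{
    std::vector<std::thread> workers;
    for (unsigned i = 0; i < numWorkers; ++i)
        workers.emplace_back([&batch] { batch.drain(); });

    batch.drain();  // the caller pulls jobs too instead of just waiting

    for (auto& w : workers)
        w.join();
}
```

With more jobs than threads, each thread simply keeps claiming indices until the batch is empty, so the same code covers both the "threads capped at logical cores" and the "fewer jobs than threads" cases.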

There is for sure some system overhead to synchronising threads, and that may become too high as a percentage of available time with really short buffer sizes… I find I can get down to a buffer size of 128 without issues though.

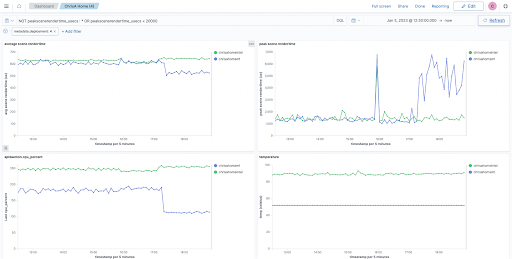

More profiling required to validate all this theorizing.

Edit - here’s a better screenshot of the Perfetto UI visualising the log from my synth’s real-time audio thread pool, just as an example (this was probably running in debug mode at the time):

Edit 2 - I saw some mention on Stack Overflow that the max number of threads a process can request on macOS could be either 4096 or 8192 (depending on OS version/silicon). Maybe there’s something specific in the Apple documentation about it, but I could not find it in a quick search. I wouldn’t be surprised if they don’t publish it in an obvious place.

The max number of threads my synth engine will ever request is logical cores * 16 + logical cores, so on a Mac M1 Air (8 logical cores) that would be 136. That is the worst-case scenario; typically it’s running between 32 and 64 threads depending on the patch/layers, reallocating any time the voice counts/layers change.