Hi,

I’m developing a modular synthesizer, and I’ve gotten far enough that I can dynamically build a complete signal path (osc->env->filter, …). Now I want to be able to generate multiple voices.

I’m wondering whether I have to duplicate the complete signal path per voice, so that all modules (osc, env, …) are instantiated and connected once per voice. Or is it enough to have, for example, only one oscillator per voice and send the outputs into the rest of the signal path, which exists only once?

Greetings

Screenshot: Imgbly — Image - UeJJCpN18R

If you share the signal path between all the voices, you won’t be able to use common per-voice modulation sources like velocity and note number.

Yes, true. The envelope would also be re-triggered by every new note. Too bad, I thought I could simplify this.

Thank you

Some instruments, like the Korg Poly 800, have multiple oscillators but only one filter, which is shared between all of the voices. This is clearly a compromise, and it means that all voices share the same filter ADSR. But it’s a legitimate arrangement if that’s what you choose to do.

One way of handling this is to make your actual modules polyphonic. So take the oscillator for example, make it handle up to ‘n’ internal oscillators rather than just one. It’ll have to emit the outputs for all oscillators too as they’ll need to hit subsequent modules independently.
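As a rough illustration of that idea, here is a minimal sketch of an oscillator module that keeps one phase per voice and returns one buffer per voice instead of a mix. The class name `PolyOscillator`, its methods, and the plain sine waveform are all assumptions made for the example, not part of anyone's actual engine.

```python
import math


class PolyOscillator:
    """Hypothetical polyphonic sine oscillator: one phase per voice."""

    def __init__(self, num_voices, sample_rate=48000.0):
        self.sample_rate = sample_rate
        self.phases = [0.0] * num_voices  # normalized phase in [0, 1)
        self.freqs = [0.0] * num_voices   # 0.0 Hz means the voice stays silent

    def set_frequency(self, voice, hz):
        self.freqs[voice] = hz

    def process(self, num_frames):
        """Return a list with one output buffer per voice (not a mix)."""
        outputs = []
        for v, hz in enumerate(self.freqs):
            step = hz / self.sample_rate
            phase = self.phases[v]
            buf = []
            for _ in range(num_frames):
                buf.append(math.sin(2.0 * math.pi * phase))
                phase = (phase + step) % 1.0
            self.phases[v] = phase  # keep the phase continuous across blocks
            outputs.append(buf)
        return outputs
```

The important part is the return shape: downstream modules receive `num_voices` separate buffers, so each voice can still be filtered and shaped on its own.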

‘env’ I’d handle a little differently. Normally I’d say a synth architecture is osc->filter->amp, and env is a modulator on the gain within amp. Again, if you want polyphony, you’ll need one env state per voice.

So at the end of this chain you’ll have a number of active voices, all independently processed, that you mix to produce the final audio output.
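A minimal sketch of that last stage, assuming the per-voice buffers arrive as a list of lists: the amp keeps one envelope level per voice, applies it, and sums everything into a single buffer. `PolyAmp` and its linear attack/release ramp are made up for the example; a real envelope would be a proper ADSR.

```python
class PolyAmp:
    """Hypothetical polyphonic amp: one envelope level per voice, mono mix out."""

    def __init__(self, num_voices, attack_step=0.01, release_step=0.001):
        self.levels = [0.0] * num_voices   # current envelope level per voice
        self.gates = [False] * num_voices  # True while the voice's key is down
        self.attack_step = attack_step
        self.release_step = release_step

    def set_gate(self, voice, on):
        self.gates[voice] = on

    def process(self, voice_buffers):
        """Apply each voice's envelope, then sum all voices into one buffer."""
        num_frames = len(voice_buffers[0])
        mix = [0.0] * num_frames
        for v, buf in enumerate(voice_buffers):
            level = self.levels[v]
            for i in range(num_frames):
                if self.gates[v]:
                    level = min(1.0, level + self.attack_step)
                else:
                    level = max(0.0, level - self.release_step)
                mix[i] += buf[i] * level
            self.levels[v] = level
        return mix
```

Because every voice has its own `level`, a new note only ramps up its own envelope and leaves the notes that are already sounding alone.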

Note, this might be going against your generally modular rack style implementation but…there’s a reason why most hardware modular setups are monophonic and it’s really expensive to go polyphonic. Depends how authentic you want to be.

Hi!

Most synth architectures try to keep the polyphonic part small and efficient, combining it with global components, while other architectures use an “instantiate everything” approach, like the Access Virus. Both are valid, but if you want performance, you should probably split your architecture into polyphonic and global elements.

If your processing graph supports sub graphs (the graph can contain graphs as nodes), you could use a special type of sub graph that contains a voice allocator. Inside, you’d design the part of the synth architecture that is truly polyphonic, while everything that’s shared is outside. This will require more logic and state than in a purely CV-signal driven architecture. I’d also recommend adding a means of checking the lifecycle of a voice, so you can suspend the voice again once it has finished playing. Since in a free modular architecture this is impossible to deduce automatically, I’d suggest going with the convention of using the state of a special “Voice main VCA envelope” for that. Additional complexity may arise from a need for appropriate voice stealing and reset behaviour.

You can’t control the voice allocator with pitch and gate signals, since you can’t associate those with particular voices. You could use MIDI input and track note on/off on particular note numbers. You could also try MPE and associate voices with MIDI channels.

To go forward, I’d suggest a divide and conquer approach. First create a voice allocator around the architecture that you currently have, for figuring out solutions for the challenges arising from voice allocation alone. Preallocating voices up to a configurable max polyphony may be a good idea to keep the processing itself allocation free.
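To make the first step more concrete, here is a small sketch of a voice allocator with a fixed, preallocated number of voice slots. It tracks notes by MIDI note number, steals the oldest voice when all slots are in use, and only frees a slot once the engine reports that the voice has finished (for example when its main VCA envelope has reached zero). All names here (`VoiceAllocator`, `note_on`, `note_off`, `voice_finished`) are hypothetical, and "steal the oldest" is just one possible policy.

```python
class VoiceAllocator:
    """Hypothetical allocator over a fixed number of preallocated voice slots."""

    def __init__(self, max_voices):
        self.notes = [None] * max_voices   # note number per voice, None = free
        self.held = [False] * max_voices   # True while the key is still down
        self.started = [0] * max_voices    # logical time of the last note-on
        self.clock = 0

    def note_on(self, note):
        """Pick a free voice, or steal the oldest one. Returns the voice index."""
        self.clock += 1
        if None in self.notes:
            voice = self.notes.index(None)
        else:
            voice = self.started.index(min(self.started))  # voice stealing
        self.notes[voice] = note
        self.held[voice] = True
        self.started[voice] = self.clock
        return voice

    def note_off(self, note):
        """Return the voices that should enter release. They are not freed yet."""
        released = []
        for v in range(len(self.notes)):
            if self.notes[v] == note and self.held[v]:
                self.held[v] = False
                released.append(v)
        return released

    def voice_finished(self, voice):
        """Call when the voice's main envelope has ended, to free the slot."""
        self.notes[voice] = None
        self.held[voice] = False
```

Nothing is allocated after construction, so `note_on` and `note_off` are safe to call from the audio thread in principle. What happens to the stolen voice's state (hard reset or short fade) is left open here.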

Once this works, you “only” need a solution for including your engine, including the voice allocator, into itself. A rack module called “ModSynth” in the UI, and a way to edit the voice-graph contained within. That sub-graph can ideally define named inputs and outputs that you can connect from the outer graph, e.g. a CV input for modulation, that you can route a “global” LFO into.

This “nesting” approach is extremely powerful for all kinds of things, not only voice allocation. You’ll have support for reusable processing chains that you can use like a single module, making complex architectures easier to build and edit. You could do drum maps that associate different sub graphs with different notes. And so on.

Have fun!

SynthEdit has entered the chat

…or any other feature-rich, node-graph-based system: Pd, Max/MSP, Reaktor, etc.

I would prefer a limited polyphony of 8 or 16 voices where each voice has its own character (different filter frequency, modulation rate, or envelope times, depending on its note number, velocity or frequency), although I don’t know how computationally expensive this would be in a modular. I suppose it would require using SSE or AVX extensions.

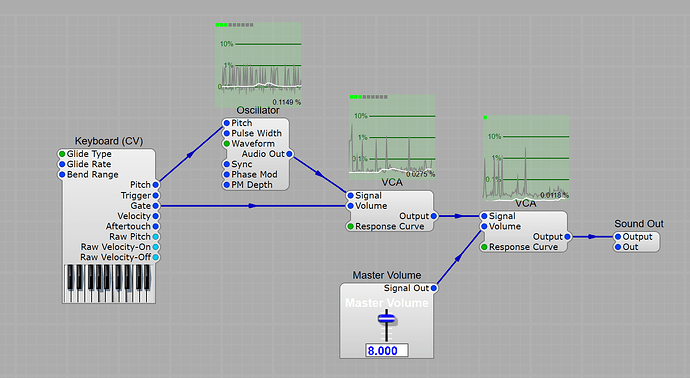

Here is a rough illustration of how to determine which modules should be polyphonic.

This is a simple instrument where a voice consists of two plugins, an Oscillator and an Amplifier (VCA).

The green area above each module shows diagnostics. The green dots represent voices, i.e. there are 9 instances of each module (one per voice), and three voices are currently playing.

So in this example, we have instantiated 9 instances of the oscillator’s processor, and the same for the leftmost VCA.

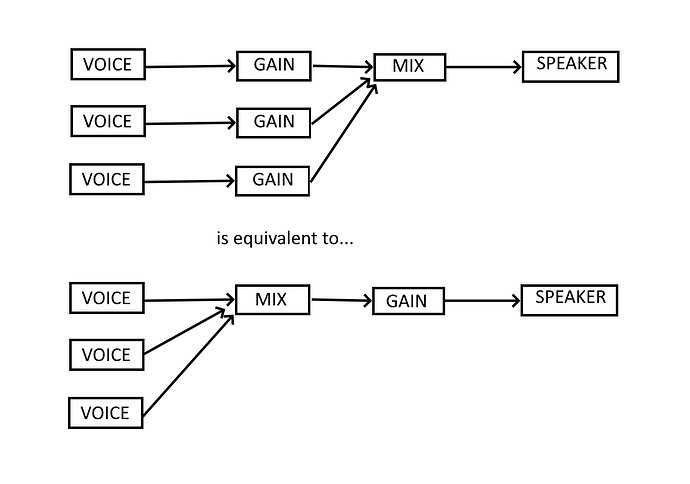

The interesting part is that there is a second VCA in the signal chain acting as a master volume, but it’s not polyphonic (it has only a single green dot). The algorithm has determined that it’s less work to mix together all the voices, and then apply the gain, than to do it the other way round.

Both VCAs use the same type of plugin, so there is nothing particularly ‘smart’ in their implementation. The reason the leftmost one is chosen to be polyphonic is that its volume is controlled by a polyphonic signal, the keyboard’s ‘Gate’ (which signals that ‘a key is down’), whereas the second VCA is controlled by a simple (non-polyphonic) parameter.
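For readers who want something to experiment with, below is one possible rule that reproduces the result in this example. It is only a guess, not the actual algorithm described above: a module becomes polyphonic if a polyphonic signal reaches an input that the module does not treat linearly (a control input such as pitch or gain). If a polyphonic signal only reaches a linear audio input, the voices can be mixed first and the module can stay mono. The function name, the graph format, and the source names are all assumptions for the sketch.

```python
def find_polyphonic(modules, poly_sources):
    """Return the set of module names that need one instance per voice.

    modules: dict mapping module name -> list of (source_name, is_linear_input).
    poly_sources: names of signals that are polyphonic by nature (e.g. gate).
    Assumed rule: a polyphonic signal on a non-linear input forces polyphony.
    """
    poly = set(poly_sources)
    changed = True
    while changed:  # repeat until no new polyphonic module is found
        changed = False
        for name, inputs in modules.items():
            if name in poly:
                continue
            if any(src in poly and not linear for src, linear in inputs):
                poly.add(name)
                changed = True
    return {name for name in modules if name in poly}


# The two-VCA instrument from the screenshots, with made-up signal names.
graph = {
    "osc": [("keyboard_pitch", False)],
    "vca1": [("osc", True), ("keyboard_gate", False)],
    "vca2": [("vca1", True), ("master_volume", False)],
}
print(find_polyphonic(graph, {"keyboard_pitch", "keyboard_gate"}))
```

With this rule, `osc` and `vca1` come out polyphonic and `vca2` stays mono, because its gain input is a plain parameter and applying a shared gain after mixing gives the same result as applying it per voice.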

There are a few details I skipped for simplicity, but I hope that makes sense.

“The algorithm has determined” - that’s the tricky part

But yeah, automatically figuring out the subgraphing, instancing, voice lifecycles and so on from the graph and the routing makes for convenient UX when creating synths in a graph editor.

I lean towards making this architecture explicit and controllable, because a user who’s not careful can quickly ruin performance by accidentally creating something that requires too many instances of demanding modules. Plus, sometimes the desired split between poly and non-poly is not all that clear. An LFO could be global or per voice. Old transistor organs often have a global attack-decay envelope in the summing stage.